by Michał Cukierman

10 min read

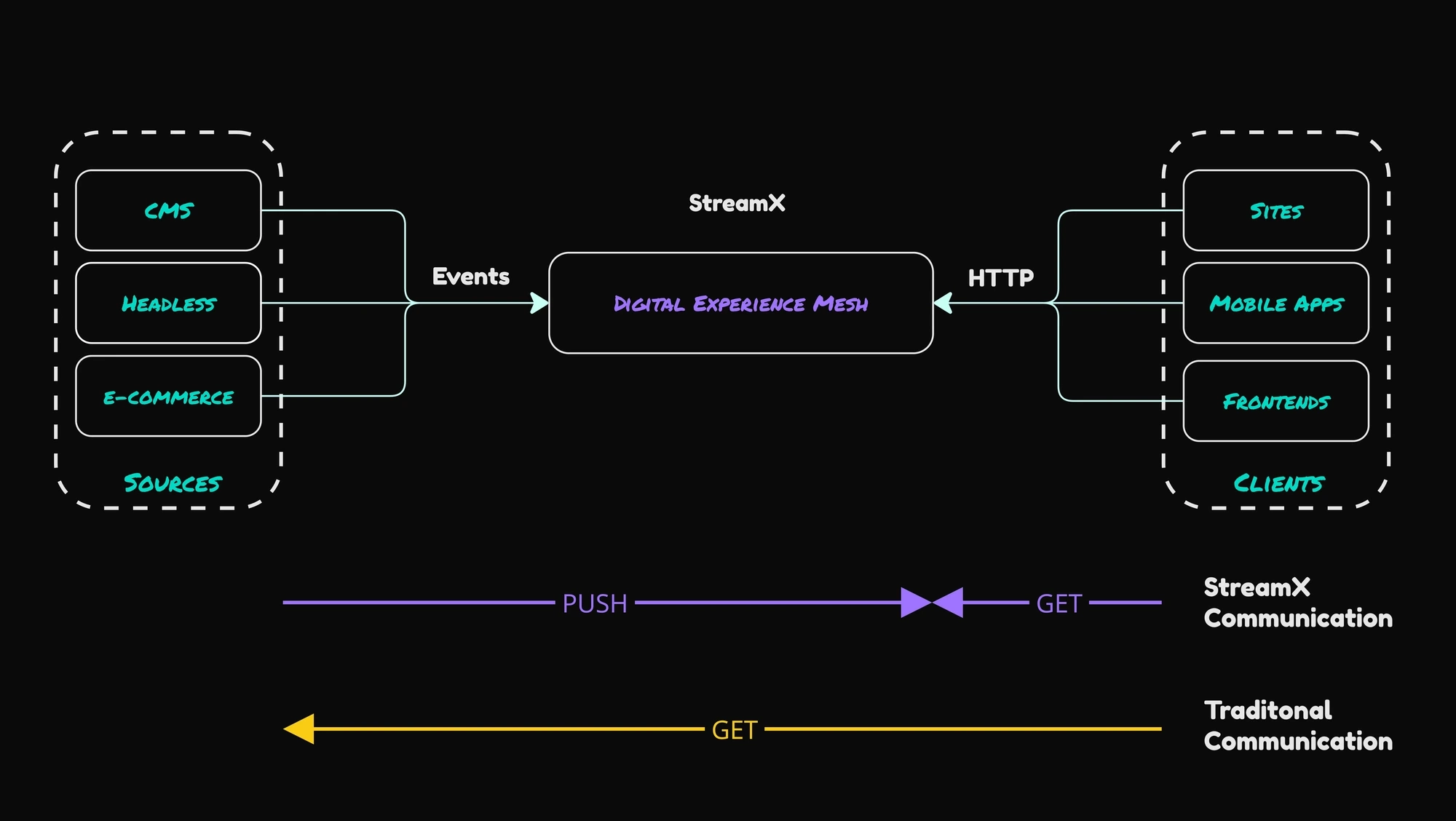

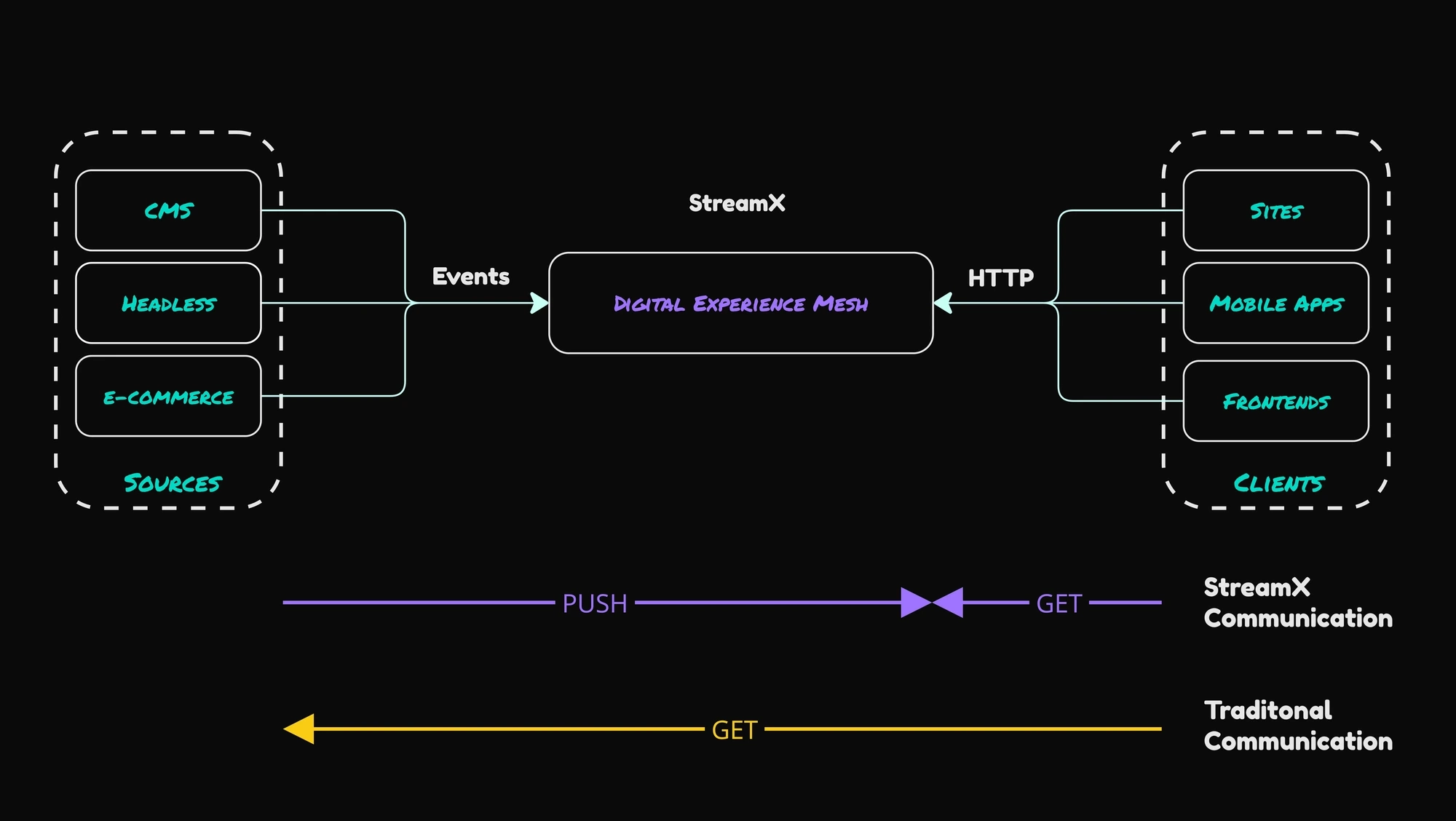

Digital Experience Mesh (DXM) is a new architecture paradigm and therefore requires some explanation. The architecture was created to address the challenges that arise when building modern Digital Platforms. It lets platforms achieve the performance, scalability, cost and security benefits of the modern web without costly and risky replatforming. The architecture takes the composability concept to a new level.

Even though the Digital Experience Mesh is a new concept, it has a lot in common with other popular concepts and architectures, many of which come from outside the Digital Experience landscape. Some of them are:

Event Streaming

Microservices

Service Mesh

Data Mesh

Event Sourcing

Reactive Programming

Domain-Driven Design

Data Processing Pipelines

Data Marts

The architecture is built for the modern web, so its primary communication methods are messages and HTTP calls. It is container-first and designed to run on any cloud, using the available container management platforms.

In the traditional approach, a CMS or e-commerce system is used both for managing the data and for delivering experiences to users. It is hard for a single, often monolithic, application to play these two completely different roles. Managing content such as pages or assets, with a limited number of parties involved (content authors, approvers and reviewers, in the case of a CMS), requires different system characteristics than delivering that content globally with minimum latency, in a highly available, secure and scalable way.

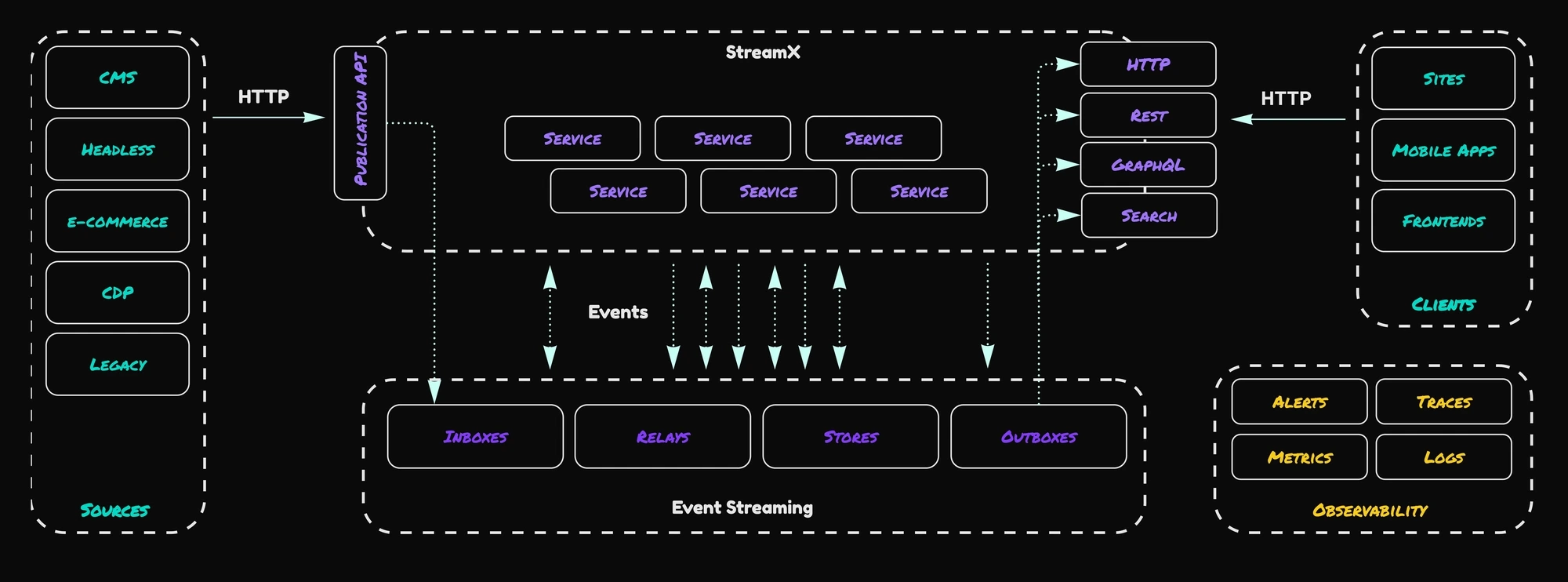

In StreamX, systems that govern the data are considered sources. The most common systems contributing to the final Digital Experience are CMS, PIM, e-commerce, CDP or legacy user APIs. Sources are responsible for reporting meaningful changes that can affect the end user's experience by sending an event with the relevant information. StreamX uses event streaming to perform digital experience choreography using a Service Mesh, and pushes the pre-processed experience data to the Unified Delivery Layer, which is made of highly scalable, distributed services located close to users to achieve the best possible performance.
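To make the role of a Source more concrete, here is a minimal sketch of source-side change detection. It assumes a hypothetical product record in which only `name`, `price` and `description` affect the rendered experience; the `ChangeNotifier` class and the event shape are illustrative and are not part of the StreamX API.

```python
import hashlib
import json

# Hypothetical: the fields of a product record that affect the rendered experience.
RELEVANT_FIELDS = ("name", "price", "description")


def fingerprint(record: dict) -> str:
    """Hash only the fields that influence the end-user experience."""
    relevant = {k: record.get(k) for k in RELEVANT_FIELDS}
    encoded = json.dumps(relevant, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class ChangeNotifier:
    """Hypothetical source-side hook: emits an event only for meaningful changes."""

    def __init__(self):
        self._last_seen: dict[str, str] = {}  # key -> fingerprint of last sent state
        self.sent_events: list[dict] = []     # stands in for events sent to StreamX

    def on_save(self, key: str, record: dict) -> bool:
        fp = fingerprint(record)
        if self._last_seen.get(key) == fp:
            return False  # only irrelevant fields changed, so nothing is sent
        self._last_seen[key] = fp
        payload = {k: record.get(k) for k in RELEVANT_FIELDS}
        self.sent_events.append({"key": key, "payload": payload})
        return True
```

Hashing only the relevant fields means that an update to, say, an internal stock location does not produce an event, which keeps noise out of the pipelines.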

Ingestion of the experience data is realised through the HTTP Publication API or native events (using protocols like Kafka, AMQP or MQTT). Products of the Data Mesh are available through REST API calls, or can take the form of push notifications.

An important aspect of the architecture is that experiences are pre-rendered (or pre-processed) and pushed to the Unified Delivery Layer using data pipelines. This shortens the route that user requests need to travel and limits the number of systems involved in processing a request. End users have no visibility of the Source systems or the StreamX data pipelines; their requests only reach the closest available delivery services.
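The difference between rendering at request time and rendering ahead of time can be sketched as follows. This is a simplified illustration, assuming a hypothetical `PAGE_TEMPLATE` and an in-memory `delivery_store` standing in for the delivery layer's local data; real pipelines and delivery services are separate, distributed processes.

```python
from string import Template

# Hypothetical page template; rendering happens in the pipeline, not per request.
PAGE_TEMPLATE = Template(
    "<html><head><title>$title</title></head><body>$body</body></html>"
)

# Stands in for the delivery layer's local snapshot of pre-rendered pages.
delivery_store: dict[str, str] = {}


def on_page_published(path: str, page: dict) -> None:
    """Pipeline step: render once, ahead of time, and push the result."""
    delivery_store[path] = PAGE_TEMPLATE.substitute(
        title=page["title"], body=page["body"]
    )


def handle_request(path: str) -> tuple[int, str]:
    """Delivery step: a local lookup only, with no call to the Source system."""
    html = delivery_store.get(path)
    return (200, html) if html is not None else (404, "Not Found")
```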

In more detail, the Publication API is a REST service exposed to receive updates from Source systems. It accepts payloads through channels, and each payload has to match a registered Avro schema. Example channels may carry page data, templates or assets. Two main endpoints are available: one for publishing and one for unpublishing. Publication events must come with a key, which is most commonly mapped to the path used to access the web resource, or to another unique key if the resource is not available through the web. Once an event is accepted, it is published to Apache Pulsar, the event streaming platform used by StreamX. The event streaming layer acts as the nervous system of the mesh. It is a scalable, low-latency and high-throughput system, and it guarantees high availability through data redundancy and multi-zonal (or multi-regional) deployment models.
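The validation rules described above can be sketched in Python. The `CHANNEL_SCHEMAS` registry below is a deliberately simplified stand-in for registered Avro schemas (a field-to-type mapping, not real Avro validation), and the `accept` function and `PublicationEvent` shape are hypothetical; they only show how a channel, an action and a key fit together.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical channel registry: a simplified stand-in for registered Avro
# schemas (field name -> expected Python type), not real Avro validation.
CHANNEL_SCHEMAS = {
    "pages": {"content": str, "template": str},
    "assets": {"url": str, "size": int},
}


@dataclass
class PublicationEvent:
    channel: str
    action: str               # "publish" or "unpublish"
    key: str                  # usually the path of the web resource
    payload: Optional[dict]   # None for unpublish


def accept(channel: str, action: str, key: str,
           payload: Optional[dict] = None) -> PublicationEvent:
    """Validate an incoming request and turn it into an event (illustrative only)."""
    schema = CHANNEL_SCHEMAS.get(channel)
    if schema is None:
        raise ValueError(f"unknown channel: {channel}")
    if not key:
        raise ValueError("publication events require a key")
    if action == "unpublish":
        return PublicationEvent(channel, action, key, None)
    if action != "publish":
        raise ValueError(f"unsupported action: {action}")
    if payload is None or set(payload) != set(schema):
        raise ValueError("payload fields do not match the channel schema")
    for field_name, expected in schema.items():
        if not isinstance(payload[field_name], expected):
            raise ValueError(f"field {field_name!r} must be {expected.__name__}")
    return PublicationEvent(channel, action, key, payload)
```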

An event accepted into Pulsar is first stored in a special type of channel within the inboxes namespace. Inbox channels are persistent with infinite retention, which means that past events can always be replayed when needed (the state of the system can be recovered to any point in time). This is especially useful when a team wants to introduce a new service (e.g. search that needs to index all published pages): such a service can read all the events it is interested in without the data having to be republished. Inboxes can also be used to clone an environment without copying all the data, and they are valuable for disaster recovery plans.
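Replaying an Inbox to bootstrap a new service can be sketched with a plain Python list as the append-only log. The `inbox`, `publish` and `SearchIndexer` names are hypothetical, and the in-memory list only stands in for a Pulsar channel with infinite retention.

```python
# In-memory stand-in for an Inbox channel: append-only and never truncated.
inbox: list[dict] = []


def publish(event: dict) -> int:
    """Append an event and return its offset in the log."""
    inbox.append(event)
    return len(inbox) - 1


class SearchIndexer:
    """Hypothetical new service that bootstraps itself by replaying the Inbox."""

    def __init__(self):
        self.documents: dict[str, set[str]] = {}  # page key -> indexed words
        self.position = 0                          # next offset to read

    def catch_up(self, log: list[dict]) -> None:
        while self.position < len(log):
            event = log[self.position]
            self.position += 1
            if event["channel"] != "pages":
                continue  # read only the events this service is interested in
            if event["action"] == "unpublish":
                self.documents.pop(event["key"], None)
            else:
                words = event["payload"]["content"].lower().split()
                self.documents[event["key"]] = set(words)

    def search(self, word: str) -> list[str]:
        return sorted(k for k, words in self.documents.items() if word.lower() in words)
```

Because the indexer starts from offset 0, it reaches the current state from history alone; no Source system has to republish anything.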

All the events from the inboxes are read by the event-driven service mesh, which is responsible for performing the experience choreography. The Service Mesh is composed of multiple services that communicate with each other using Relay channels. Each service can use one or more Stores, which are local copies of the events the service requires. Since there may be multiple instances of a service type, Stores are synchronised using channels from the Stores namespace. These channels are backed by an append-only log, which is required for building local, materialised views of the data. Channels in the Stores namespace are persistent with infinite retention, but they are also compacted: only the latest event for each key is kept, since that is the only occurrence we are interested in.
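Compaction and the materialised views built from it can be illustrated with two small functions. This is a sketch of the general technique, not of Pulsar's implementation; in this example a `None` value is assumed to act as a delete marker (tombstone).

```python
def compact(log: list[tuple[str, object]]) -> list[tuple[str, object]]:
    """Keep only the latest event per key, preserving the order of the survivors."""
    latest_offset = {key: offset for offset, (key, _) in enumerate(log)}
    return [entry for offset, entry in enumerate(log) if latest_offset[entry[0]] == offset]


def materialise(log: list[tuple[str, object]]) -> dict:
    """Build a local key -> value view (a Store) by replaying a log.

    In this sketch, a None value acts as a tombstone and removes the key.
    """
    view = {}
    for key, value in log:
        if value is None:
            view.pop(key, None)
        else:
            view[key] = value
    return view
```

Replaying the compacted log yields the same view as replaying the full log, which is what makes compaction safe for Stores.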

One of the main challenges when implementing event-driven service meshes is event ordering and delivery guarantees. StreamX satisfies these requirements on a per-key basis, for the latest available events. This means that an event may not be delivered if it is no longer relevant (such as an outdated page update), and that the order is preserved for a single key (e.g. update events for a given page always arrive in the right sequence).
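The per-key guarantee can be illustrated from the consumer's point of view. The sketch assumes each event carries a per-key, monotonically increasing `version` (a hypothetical field added for illustration, not necessarily how StreamX tracks order): events for different keys are independent, while an older update for a key that has already moved on is skipped as outdated.

```python
class PerKeyConsumer:
    """Applies events per key and skips those that are already outdated.

    Assumes a hypothetical per-key, monotonically increasing version number.
    """

    def __init__(self):
        self.versions: dict[str, int] = {}  # key -> latest applied version
        self.state: dict[str, str] = {}     # key -> latest applied value
        self.skipped: list[tuple[str, int]] = []

    def handle(self, key: str, version: int, value: str) -> bool:
        if version <= self.versions.get(key, -1):
            # Stale: a newer update for this key was already applied.
            self.skipped.append((key, version))
            return False
        self.versions[key] = version
        self.state[key] = value
        return True
```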

At the end of the data pipelines, experiences are stored in the Outbox channels. As in Stores, data in Outboxes is retained and compacted. Outbox channels are the final data products exposed to delivery services for consumption.

Data in Inboxes, Stores and Outboxes is retained, so StreamX supports data offloading: old data can be moved to long-term storage, like an S3 bucket or a network drive.
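Offloading can be pictured as moving the oldest part of a log to a cheaper tier while keeping offsets stable for readers. The `TieredLog` class below is a hypothetical, in-memory sketch; `long_term` only stands in for S3 or a network drive.

```python
from dataclasses import dataclass, field


@dataclass
class TieredLog:
    """Append-only log whose oldest entries can be offloaded to cheaper storage.

    `long_term` stands in for S3 or a network drive; reads stay transparent.
    """
    long_term: list = field(default_factory=list)
    hot: list = field(default_factory=list)

    def append(self, event) -> None:
        self.hot.append(event)

    def offload(self, keep_last: int) -> int:
        """Move all but the newest `keep_last` entries out of the hot tier."""
        cut = max(len(self.hot) - keep_last, 0)
        self.long_term.extend(self.hot[:cut])
        del self.hot[:cut]
        return cut

    def read(self, offset: int):
        """Offsets are global, so consumers need not know where an entry lives."""
        if offset < len(self.long_term):
            return self.long_term[offset]
        return self.hot[offset - len(self.long_term)]
```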

The last type of services are Delivery Services. Examples include an HTTP server, a GraphQL server, a search engine or microservices serving dynamic data. Each delivery service has its own snapshot of the data, which ensures full horizontal scalability of this layer. When a new replica of a service starts, it subscribes to the Outbox channels and reads all the experiences it requires to be operational. This way, scaling can be done automatically without worrying about managing service state. The services may be deployed in separate clusters or on the Edge to ensure high availability and low latency for the end user. Delivery services, which are in sync with the Outboxes, hold the unified data products from the Source systems, which is why we refer to them as the Unified Delivery Layer.
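A delivery replica that bootstraps itself from an Outbox can be sketched as follows. The `DeliveryReplica` class is hypothetical, the Outbox is modelled as a list of `(key, value)` pairs, and a `None` value is assumed to mean the resource was unpublished.

```python
from typing import Optional


class DeliveryReplica:
    """Hypothetical delivery service replica holding its own full data snapshot."""

    def __init__(self):
        self.snapshot: dict[str, str] = {}  # path -> pre-rendered experience
        self.offset = 0                      # next Outbox entry to read
        self.ready = False

    def sync(self, outbox: list[tuple[str, Optional[str]]]) -> None:
        """Read every Outbox entry not seen yet; None removes a resource."""
        for key, value in outbox[self.offset:]:
            if value is None:
                self.snapshot.pop(key, None)
            else:
                self.snapshot[key] = value
        self.offset = len(outbox)
        self.ready = True  # report readiness only once the backlog has been read

    def get(self, path: str) -> Optional[str]:
        return self.snapshot.get(path)
```

Because every replica rebuilds the same snapshot from the same Outbox, a replica started later ends up in the same state as one that has been running all along.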

The design is secure by default. Users' access is limited to the Unified Delivery Layer, with no direct connection to the CMS, PIM, e-commerce or other Source systems.

An important part of the solution is observability. StreamX supports telemetry data (metrics, logs and traces) and alerting. By default, it is configured to use OpenTelemetry and Micrometer. StreamX comes with Jaeger for trace visualisation and predefined Grafana dashboards.

Note: Even though StreamX is deployed to multiple availability zones and its services run on multiple physical machines, end-to-end latencies are measured in tens of milliseconds (unless blocking operations or extensive processing are involved).

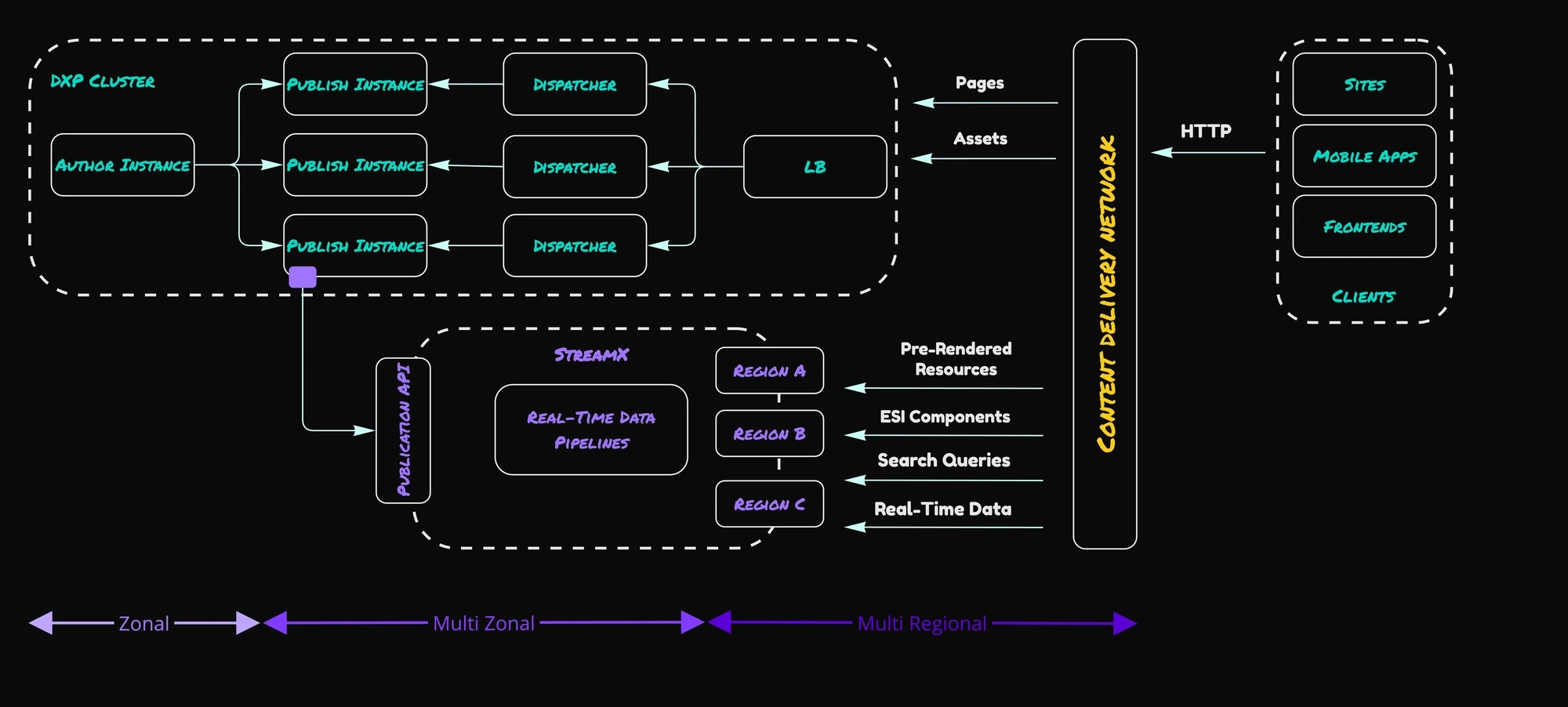

StreamX was designed to accelerate and modernise traditional DXP stacks. Therefore, the real synergy comes from connecting it to existing platforms.

Traditional DXP is commonly composed of:

Single Author instance - dedicated to content authors

Multiple Publish instances - responsible for experience rendering

Dispatchers - HTTP caching servers

Load Balancer

Publish instances can be connected to the Publication API using connectors. StreamX comes with predefined connectors, for example for Adobe Experience Manager or Magento. Since Publish holds the published state of resources, it is the most common layer to integrate with. The Publish instance notifies StreamX about every relevant change that may affect the final customer experience.
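A connector on the Publish side could look roughly like the hook below. Everything here is an assumption for illustration: the `activate`/`deactivate` action names, the `make_connector` function and the `send` callable, which stands in for the HTTP call to the Publication API. It is not the API of the StreamX connectors for Adobe Experience Manager or Magento.

```python
import json
from typing import Callable

# Hypothetical mapping from source-side replication actions to publication actions.
ACTIONS = {"activate": "publish", "deactivate": "unpublish"}


def make_connector(send: Callable[[str, str, str, bytes], None], channel: str = "pages"):
    """Return a hook the Publish instance could call after each replication event.

    `send(action, channel, key, body)` stands in for the HTTP call to the
    Publication API; its shape is an assumption for this sketch.
    """
    def on_replication(action: str, path: str, properties: dict) -> None:
        api_action = ACTIONS.get(action)
        if api_action is None:
            return  # not relevant for the final customer experience
        body = json.dumps(properties).encode("utf-8") if api_action == "publish" else b""
        send(api_action, channel, path, body)

    return on_replication
```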

StreamX can create multiple experience types that can be consumed by the DXP. In most cases it is used to provide personalised experiences at scale while keeping response times in the low milliseconds. StreamX can support an existing platform by providing:

Page Fragments accessible through Edge Side Include (ESI) or Server Side Include (SSI) tags. Usually these are CMS components rendered by StreamX.

Pre-rendered resources, like sitemaps or mobile feed data

Search Experiences, either included through ESI or integrated in the frontend

GraphQL service available for the frontend integration
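As an illustration of how a page fragment can be included, here is a minimal ESI include resolver. It handles only self-closing `<esi:include src="..."/>` tags (ESI processors in CDNs and caching servers support more, such as `alt` and `onerror`), and `fetch_fragment` is a hypothetical callable that would fetch the fragment from a StreamX delivery service.

```python
import re
from typing import Callable, Optional

# Matches self-closing ESI include tags such as <esi:include src="/fragments/cart"/>.
ESI_INCLUDE = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')


def resolve_includes(page: str, fetch_fragment: Callable[[str], Optional[str]]) -> str:
    """Replace each include tag with the fragment returned by fetch_fragment(src).

    A missing fragment (None) is rendered as an empty string in this sketch.
    """
    return ESI_INCLUDE.sub(lambda m: fetch_fragment(m.group(1)) or "", page)
```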

All types of experience can be:

Pre-rendered - created ahead-of-time based on historical events

Produced directly on the edge based on additional user input (e.g. search results)
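The two modes can be contrasted in a short sketch: a sitemap is rebuilt ahead of time whenever the set of published pages changes, while search results depend on user input and are computed per request. `BASE_URL`, `render_sitemap` and `search_on_edge` are hypothetical names; the sitemap follows the sitemaps.org `urlset` format.

```python
from xml.sax.saxutils import escape

BASE_URL = "https://www.example.com"  # hypothetical site origin


def render_sitemap(published_paths) -> str:
    """Ahead of time: rebuild sitemap.xml whenever the published pages change."""
    urls = "".join(
        f"<url><loc>{escape(BASE_URL + p)}</loc></url>" for p in sorted(published_paths)
    )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>'
        f'<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">{urls}</urlset>'
    )


def search_on_edge(titles: dict, query: str) -> list:
    """Per request: results depend on user input, so they are computed at the edge."""
    q = query.lower()
    return sorted(path for path, title in titles.items() if q in title.lower())
```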

As the diagram above shows, the CDN has the most points of presence (POPs) and enterprise load balancers are available globally. StreamX delivery services can be deployed in multiple regions or on the edge, but their number is usually limited to the geographical locations from which most of the traffic is expected.

Digital Experience Mesh offers a way to apply recent technological advancements to update and improve existing digital platforms, without the need to start over from scratch.

Curious to learn more? Follow Michał Cukierman on LinkedIn for StreamX architecture updates.