by Michał Cukierman

6 min read

Traditional Digital Experience Platforms (DXPs) form the basis for many digital products, such as e-commerce platforms. They are typically content-centric but can also integrate a variety of systems and services. However, monolithic systems, which often introduce single points of failure and offer low throughput and limited scalability, are, to put it mildly, not ideal for building an extensive digital ecosystem.

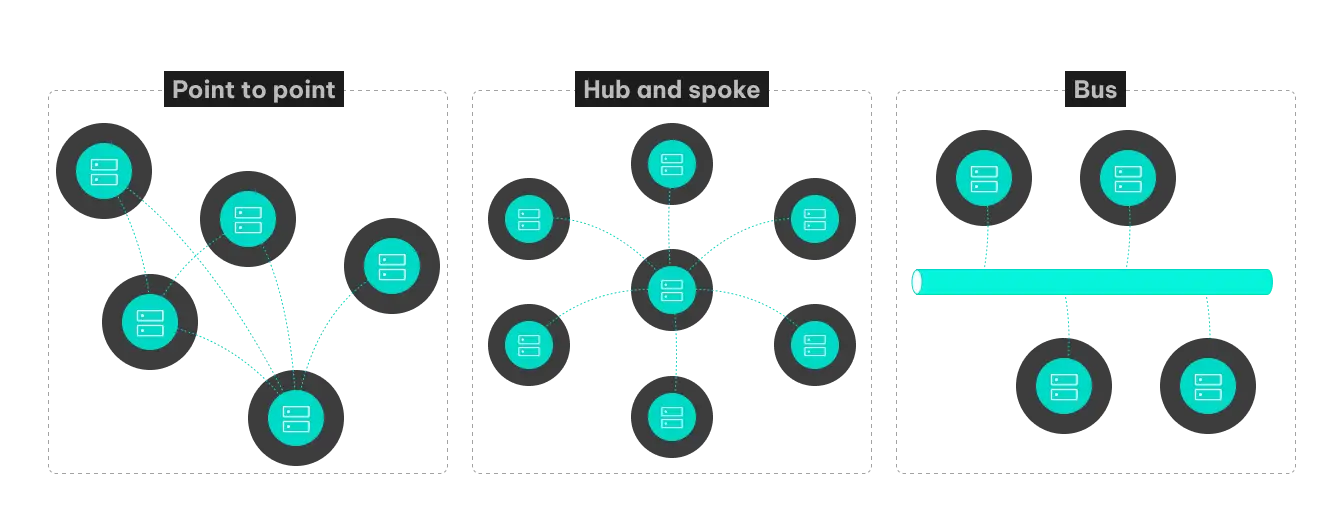

There are three major integration architectures: point-to-point, hub-and-spoke, and Enterprise Service Bus (ESB).

Figure 1. Integration architectures

Point-to-point integration: This method involves a direct connection between two systems, using either custom code or pre-built connectors. It is simple and straightforward for uncomplicated integrations, yet it becomes intricate and challenging to manage as the number of integrations grows.

Point-to-point integrations can lead to a tightly coupled system, complicating any system changes or updates. Each integration must also handle failure scenarios on its own, such as network issues or system unavailability. Reusability is limited, too, because the contract exists only between the paired systems.
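To make the failure-handling burden concrete, here is a minimal sketch of the retry logic that each point-to-point integration typically ends up carrying on its own. The function name `call_with_retry`, its parameters, and the choice of exponential backoff are assumptions made for illustration; they are not taken from any particular DXP.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retry(
    call: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call a remote system, retrying on connection errors (hypothetical helper).

    Waits base_delay, then 2 * base_delay, then 4 * base_delay, and so on
    between failed attempts.
    """
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return call()
        except ConnectionError as error:
            last_error = error
            # Back off before the next attempt, but not after the final one.
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)
    raise RuntimeError(
        f"target system unavailable after {attempts} attempts"
    ) from last_error
```

In a point-to-point setup, logic like this has to be written, tuned, and tested separately for every pair of connected systems, which is part of why the approach gets harder to manage as integrations multiply.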

Hub-and-spoke: This model features a centralized hub, usually message-oriented middleware, which integrates with more than two systems. It reduces the number of required point-to-point connections, enhancing reliability and scalability. Many problems seen in point-to-point integrations, such as message redelivery or support for exclusive message consumption, are solved by the hub itself.

The architecture is extensible and not tightly coupled, so it is easy to add new systems to the hub or remove old ones. However, setting up and maintaining this approach can be more complex, as it needs additional infrastructure and configuration.
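The reduction in connections can be shown with simple arithmetic. The sketch below assumes the worst case for point-to-point, where every system needs a link to every other system, and compares it with one link per system to a hub. The function names are illustrative.

```python
def point_to_point_links(systems: int) -> int:
    """Links needed when every system connects directly to every other one."""
    return systems * (systems - 1) // 2


def hub_and_spoke_links(systems: int) -> int:
    """Links needed when every system connects only to a central hub."""
    return systems


if __name__ == "__main__":
    for n in (3, 5, 10, 20):
        print(n, point_to_point_links(n), hub_and_spoke_links(n))
```

Under this assumption, 10 systems need 45 direct links but only 10 links to a hub, and the gap widens quadratically as more systems are added. Real landscapes rarely need every possible pairing, so the point-to-point figure is an upper bound.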

Enterprise Service Bus (ESB): An ESB is middleware that provides a central hub for multiple system integrations. Like hub-and-spoke, it often uses a messaging system and inherits most of its characteristics. The ESB handles message delivery, orchestration, routing, data transformation, and enrichment.

The ESB can also provide additional features such as message logging, security, and monitoring. This approach can simplify integration by providing a single point of control for all integrations. However, ESB setup and maintenance can be complex and expensive compared to other methods. It often requires a dedicated, and therefore expensive, team, which can slow down development due to the need for coordination.

Despite this variety, most of the leading Digital Experience Platforms (DXPs) rely on point-to-point (P2P) integration, which is typically established within the author or publisher component of the system. It can take different forms:

A service could be calling the author part and feeding it with data.

A service could be listening for an action in the author part and then start other actions (like cache flush).

A scheduled task could be bringing in data from an external source, processing it, and distributing it to publishers.

There could be a proxied call to an external service.

A service could be making a series of HTTP calls in response to a browser request.

The P2P approach shows its limitations when faced with large data volumes. This is why, for example, a DXP integrated with a Product Information Management (PIM) system will struggle to maintain high availability when a large inventory on an e-commerce platform needs to be updated.

Read our use case on how StreamX can tackle a problem like this.

When multiple systems need to talk to each other, using a traditional DXP as the core of new digital products is, at best, risky:

Costly and inefficient integrations with external systems

Fragile platforms with multiple single points of failure

Additional dependency on the availability and performance of external services

Increased platform complexity, which results in higher maintenance costs

Missed opportunities because of the time required to introduce new features

Inability to utilize all the data available in companies and organizations

Platforms that cannot be extended with services requiring extensive analysis of large amounts of data, such as machine-learning-based recommendations or web-scale personalization based on user interactions and profiles

This is why traditional DXPs may no longer be the best choice for building complex digital ecosystems. They are sufficient for simple cases, but it is easy to put too much responsibility on a system primarily created to work with pages, assets, tags, and sites.

There are systems that support advanced integrations and are already widely used by industry pioneers like The New York Times or Netflix. They offer wider capabilities but do not fall into any of the categories mentioned above.

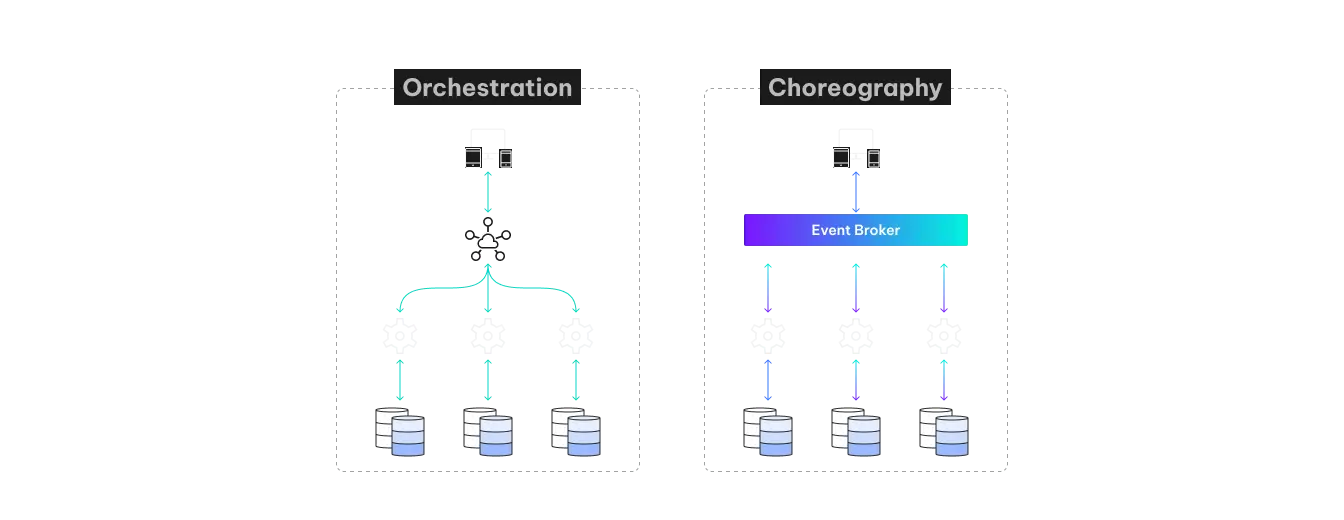

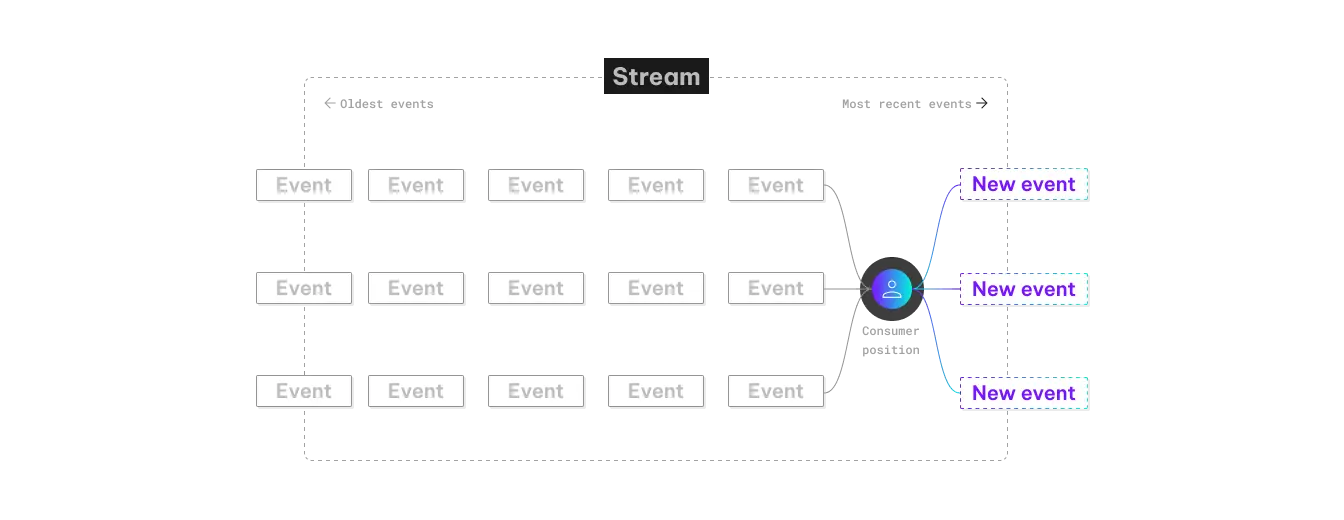

Distributed event streaming platforms are designed to support event processing using a publish-subscribe mechanism. Unlike an Enterprise Service Bus (ESB), they don't use message orchestration, where the ESB is responsible for controlling the message flow between systems. Instead, message choreography is used. In this approach, each system involved in the integration independently handles sending and receiving its own messages.

Figure 2: Orchestration vs choreography

Without a central controller to determine the flow of messages, each system takes responsibility for appropriately responding to the messages it receives from other systems. Contracts are defined by topic name and type, consumption type, and event message format. Some platforms provide additional features, like transactions or query languages. Because of the lack of centralized orchestration, all of the platform's components can be distributed.
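Choreography over publish-subscribe can be sketched with a toy in-memory broker. This is a simplified illustration, not how any specific streaming platform is implemented: the `InMemoryBroker` class, the topic names `product.updated` and `price.calculated`, the two services, and the 23% tax rate are all hypothetical. The point is that no component dictates the overall flow; each service only knows the topics it consumes and produces.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List, Tuple

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class InMemoryBroker:
    """Toy stand-in for an event streaming platform (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self.history: List[Tuple[str, Event]] = []

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        # Record the event, then deliver it to every subscriber of the topic.
        self.history.append((topic, event))
        for handler in list(self._subscribers[topic]):
            handler(event)


def run_demo() -> Tuple[List[str], Dict[str, int]]:
    broker = InMemoryBroker()
    search_index: Dict[str, int] = {}

    def pricing_service(event: Event) -> None:
        # Reacts to product updates and emits a new event of its own.
        gross_cents = event["net_cents"] * 123 // 100  # assumed 23% tax
        broker.publish(
            "price.calculated",
            {"sku": event["sku"], "gross_cents": gross_cents},
        )

    def search_indexer(event: Event) -> None:
        # Knows nothing about the pricing service, only about the topic.
        search_index[event["sku"]] = event["gross_cents"]

    broker.subscribe("product.updated", pricing_service)
    broker.subscribe("price.calculated", search_indexer)
    broker.publish("product.updated", {"sku": "sku-1", "net_cents": 10000})
    return [topic for topic, _ in broker.history], search_index
```

Adding a third consumer of `price.calculated` would require no change to the pricing service or the indexer, which is the practical benefit of defining contracts by topic and message format rather than by direct calls.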

Such platforms are highly available and can continue operating even if part of a cluster goes offline. Redundancy can be configured so that there are typically at least three nodes of each type distributed across different availability zones. This structure allows the platforms to survive and automatically recover from data center outages.

Distributing the system removes single points of failure, while multiple brokers and partitions (naming can vary depending on the platform) allow for linear scalability. Another key benefit is data retention, which means that the system's state can be replayed after a failure.

The ability to retrieve messages from the past makes it possible to introduce new integrations that will have access to historical data. Therefore, event streaming platforms are the perfect choice for creating real-time integrations that can handle large volumes of data.

Since messages are sent to the event streaming platform and stored in a persistent log that can be queried, we don’t need to depend on the source system availability. All the data is already available on our platform.

This setup allows us to take full advantage of the push model, where external data sources are only required when there are messages to send.

Figure 3: Logical view of persistent event log with 3 partitions
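A persistent, partitioned log with replay can be sketched in a few lines. This is a minimal in-memory model under stated assumptions, not a real platform's storage engine: the `PartitionedLog` class and its methods are hypothetical, records are assigned to partitions by a CRC32 hash of their key, and nothing is written to disk.

```python
import zlib
from typing import Any, List, Tuple

Record = Tuple[str, Any]


class PartitionedLog:
    """Append-only log split into partitions (illustrative only)."""

    def __init__(self, partitions: int = 3) -> None:
        self._partitions: List[List[Record]] = [[] for _ in range(partitions)]

    def partition_for(self, key: str) -> int:
        # A stable hash keeps all records with the same key in one partition,
        # which preserves their relative order.
        return zlib.crc32(key.encode("utf-8")) % len(self._partitions)

    def append(self, key: str, value: Any) -> Tuple[int, int]:
        """Store a record and return its (partition, offset)."""
        partition = self.partition_for(key)
        self._partitions[partition].append((key, value))
        return partition, len(self._partitions[partition]) - 1

    def read(self, partition: int, offset: int = 0) -> List[Record]:
        """Return records from the given offset; offset 0 replays everything."""
        return list(self._partitions[partition][offset:])


if __name__ == "__main__":
    log = PartitionedLog(partitions=3)
    partition, _ = log.append("sku-1", {"price": 10})
    log.append("sku-1", {"price": 12})
    # A consumer added later can still replay the full history for this key.
    print(log.read(partition, offset=0))
```

Because records are never overwritten, a newly added integration can read from offset 0 and rebuild its state without calling the source system, while an existing consumer resumes from the last offset it processed.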

As we already stated, data processing and synchronization across different systems are the most challenging aspects of integrations involving a DXP.

By adopting the event-driven integration approach, we are moving away from dependency on source system availability, making DXPs resilient and adaptable. This is a game changer that turns the limitations of traditional systems into new opportunities.

For companies that want to leverage all of their available data and extend their platform with sophisticated services such as AI-based recommendations, it’s clear that the future is event-driven. It's about staying ahead, innovating and always delivering the best possible user experience.