by Michał Cukierman

13 min read

Over the past 20 years, the digital landscape has become an integral part of human reality. In many ways, the Internet has become the world's largest city: dynamic, constantly evolving, and changing how people interact within its boundaries.

In this city, traditional Digital Experience Platforms (DXPs) could be compared to the road system: functional but congested, somewhat rigid, and struggling to keep up with ever-growing demand.

Imagine a flexible highway system that’s ready not only for the vehicles we know today, but also for those not yet on the market. It accommodates new traffic sources without bottlenecks, integrates new routes, and enables fast, smooth communication. This is the vision we set out to realize for the digital landscape with StreamX.

The StreamX vision has been growing over the past 3 years, shaped and influenced by a team of talented individuals. We began by defining a comprehensive list of problems associated with traditional DXPs. Through an iterative process, we kept refining our solution until it addressed all of these issues.

As we started to present our solution outside the company, we discovered that simply providing a platform to fix existing problems was not enough. That's how we came up with the idea of creating an Experience Data Mesh.

Through constant feedback from our team of 30 ds.pl developers, we were able to identify and effectively address the long-standing challenges faced by the DXP industry.

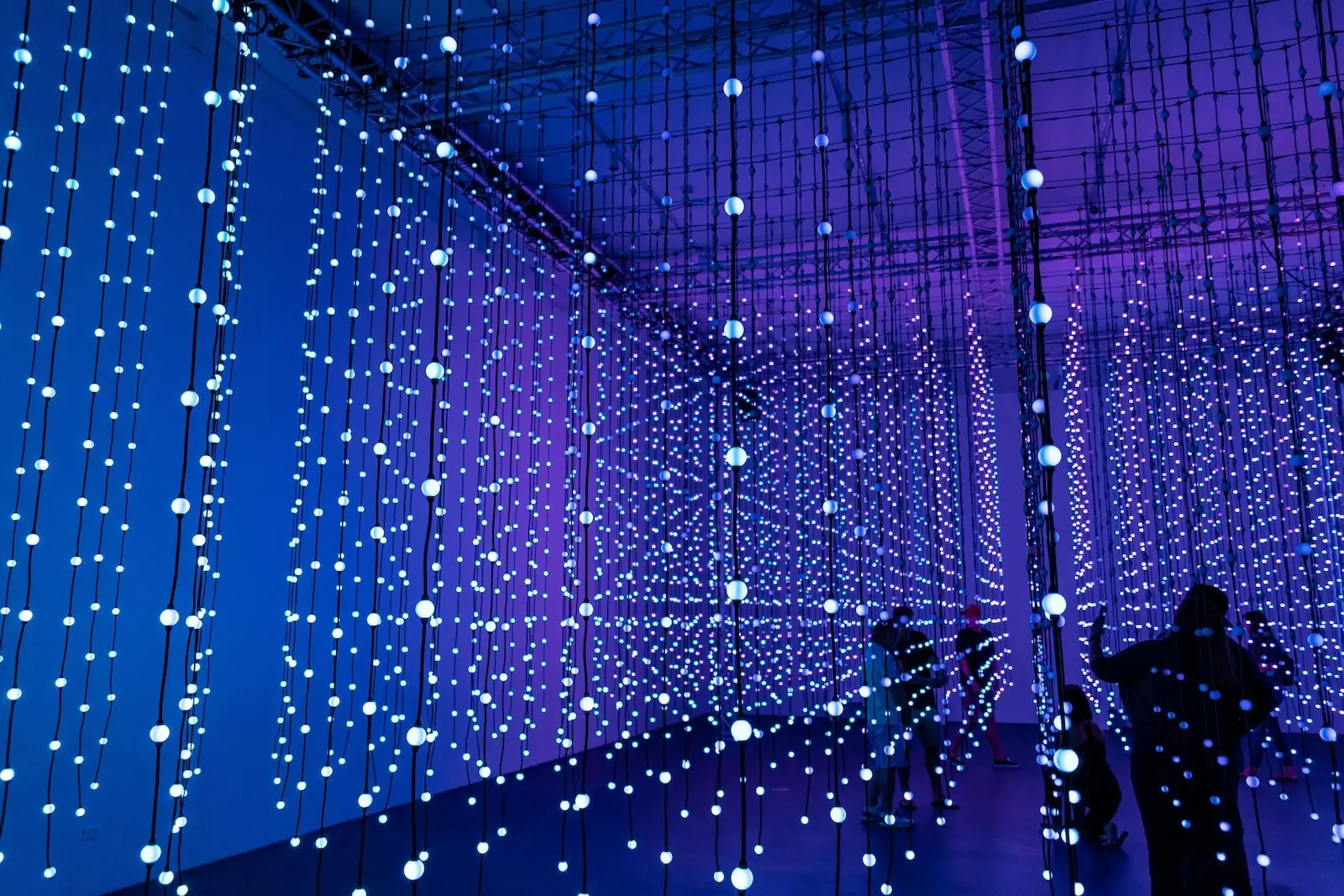

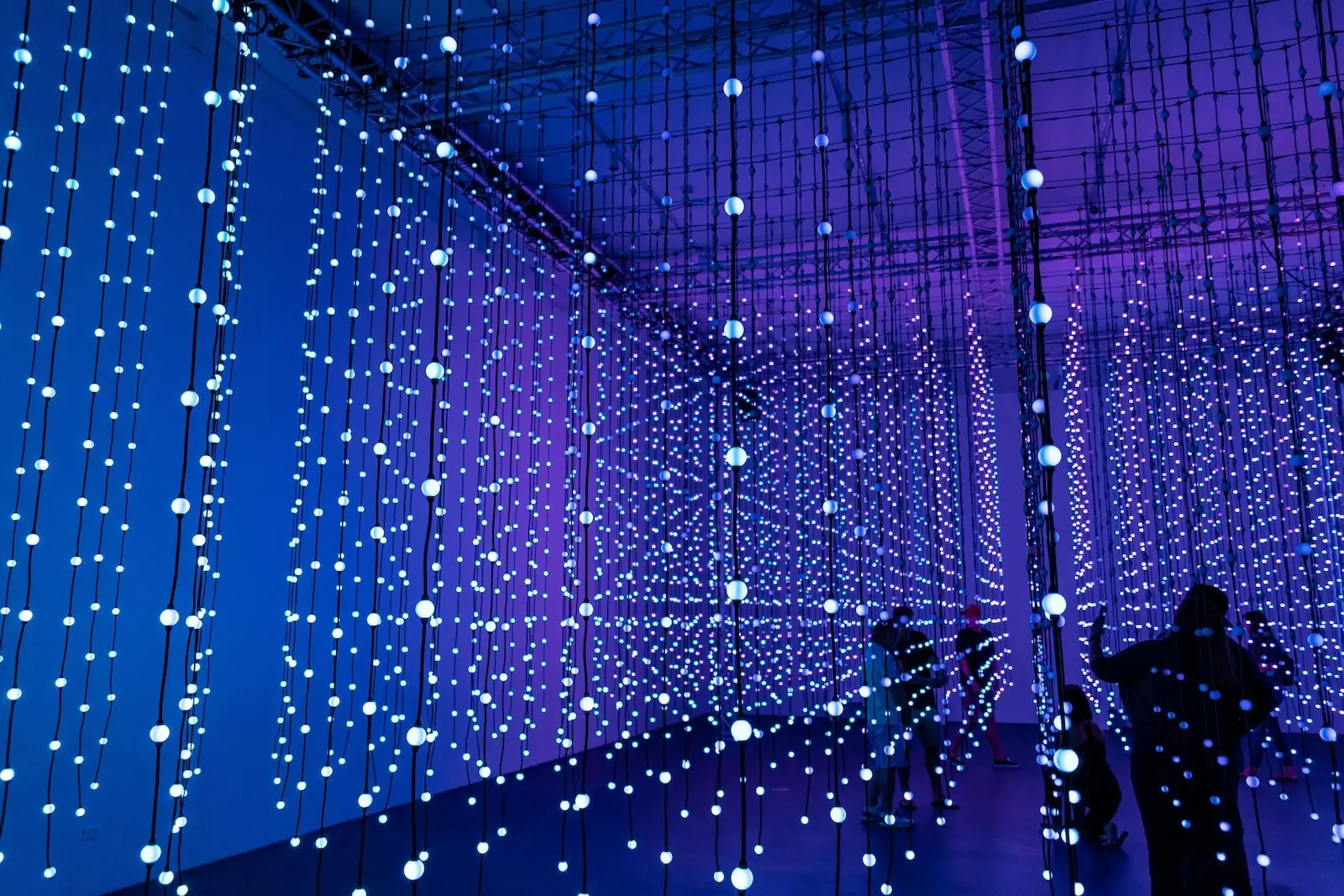

StreamX can be compared to an intelligent, adaptable and efficient highway system. It detangles content management, distributes content like never before, and delivers a high-speed, synchronized experience to the end user.


Figure 1: real-time DXP fundamental layers

All of this is built upon a cloud-native and composable architecture. By separating the content sources, distribution, and delivery into distinct layers, it fundamentally redefines the flow of content.

Welcome to the next generation of digital experience management, redesigned to keep pace with the ever-evolving digital world.

StreamX uses event-driven pipelines to gather data from various sources and transform it into digital experiences ready for lightweight global delivery.

Accordingly, the StreamX Experience Data Mesh is anchored on three foundational layers:

- unified sources: systems that feed data into the platform using standardized messages
- smart distribution: the platform creating experiences from incoming data in real time
- lightweight delivery: services that deliver experiences efficiently and close to the clients
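To make the three layers more concrete, here is a minimal Python sketch of one piece of content passing through them. It is an illustration under our own assumptions, not StreamX code: `Message`, `unified_source`, `smart_distribution`, and `LightweightDelivery` are hypothetical names, and each layer is reduced to a single function or class running in one process.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """Hypothetical standardized message; the real contract is not shown here."""
    key: str       # target path of the experience, e.g. "/products/42.html"
    payload: dict  # source data, e.g. {"title": "Mug"}


def unified_source(product_id: int, title: str) -> Message:
    """Source layer: a system publishes its data as a standardized message."""
    return Message(key=f"/products/{product_id}.html", payload={"title": title})


def smart_distribution(msg: Message) -> tuple[str, str]:
    """Distribution layer: turn incoming data into a ready-to-serve experience."""
    html = f"<html><body><h1>{msg.payload['title']}</h1></body></html>"
    return msg.key, html


class LightweightDelivery:
    """Delivery layer: serve pre-generated experiences from local storage."""

    def __init__(self) -> None:
        self._pages: dict[str, str] = {}

    def apply(self, key: str, html: str) -> None:
        self._pages[key] = html

    def get(self, path: str) -> str | None:
        return self._pages.get(path)


delivery = LightweightDelivery()
delivery.apply(*smart_distribution(unified_source(42, "Mug")))
print(delivery.get("/products/42.html"))
```

Note that the delivery layer never calls back into the source: by the time a request arrives, the page already exists locally.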

Each one plays a crucial role in defining the platform. In the following sections, we will go through the problems StreamX solves, its core design concepts, and the most significant features of the platform.


Core concept:

The content management system (CMS) should be used by content authors to feed content into the platform, but the CMS itself should not be responsible for all aspects of the platform. By separating the CMS from the central point of the digital experience platform (DXP), companies can have multiple sources of content and data, integrate various APIs and services, and benefit from a loosely coupled architecture.

We've reimagined the role of the CMS as a dedicated content source, focusing on its core strengths: content creation and publishing. Once published, the content itself becomes independent of the CMS.

By transforming the CMS into a decentralized content source, we've opened the door for different types of content and data to flow through a message-oriented architecture. In StreamX, systems communicate asynchronously, allowing any system to pass data to the DXP, provided it adheres to a pre-defined message contract.
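A pre-defined message contract can be thought of as a schema that every source must satisfy before its data is accepted. The sketch below assumes a hypothetical contract with `key`, `type`, and `payload` fields and three allowed types; the actual StreamX contract is not described here, so treat the field names and the `accept` function as illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical contract: field names and allowed types are illustrative only.
REQUIRED_FIELDS = {"key": str, "type": str, "payload": dict}
ALLOWED_TYPES = {"page", "product", "asset"}


class ContractViolation(ValueError):
    """Raised when a source sends a message that breaks the contract."""


@dataclass(frozen=True)
class ContentMessage:
    key: str
    type: str
    payload: dict


def accept(raw: dict) -> ContentMessage:
    """Validate a raw message from any source before it enters the platform."""
    for name, expected in REQUIRED_FIELDS.items():
        if not isinstance(raw.get(name), expected):
            raise ContractViolation(f"field {name!r} must be a {expected.__name__}")
    if raw["type"] not in ALLOWED_TYPES:
        raise ContractViolation(f"unknown message type {raw['type']!r}")
    return ContentMessage(raw["key"], raw["type"], raw["payload"])


# Any system may publish, as long as its messages pass validation.
print(accept({"key": "/about.html", "type": "page", "payload": {"title": "About"}}))
```

Because validation depends only on the message, the platform does not need to know which system produced it.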

For organizations that have already invested in centralized DAM systems or have other CMSs, StreamX’s architecture offers a significant advantage: it eliminates the need for costly migrations. Teams can stick with the systems they know; each system only needs an adapter to talk to the DXP. APIs and services such as PIM or CRM can also be integrated into StreamX to enhance end-user experiences.
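An adapter is essentially a translation function from a system's native format to the shared message shape. In this sketch, the PIM field names (`sku`, `name`, `price_cents`), the CMS export fields, and the `{key, type, payload}` message shape are all hypothetical; the point is that each source keeps its own model and only its adapter knows how to map it.

```python
# Hypothetical adapters: the PIM and CMS field names below are invented, and the
# output follows an illustrative {key, type, payload} message shape.


def pim_adapter(record: dict) -> dict:
    """Translate a PIM product record into a contract-compliant message."""
    cents = record["price_cents"]
    return {
        "key": f"/products/{record['sku'].lower()}.html",
        "type": "product",
        "payload": {
            "name": record["name"],
            # Integer arithmetic avoids float rounding when formatting money.
            "price": f"{cents // 100}.{cents % 100:02d}",
        },
    }


def cms_adapter(page: dict) -> dict:
    """Translate a CMS page export into the same message shape."""
    return {"key": page["path"], "type": "page", "payload": {"title": page["title"]}}


messages = [
    pim_adapter({"sku": "MUG-01", "name": "Mug", "price_cents": 1299}),
    cms_adapter({"path": "/about.html", "title": "About us"}),
]
for m in messages:
    print(m["type"], m["key"])
```

Replacing the PIM later would mean writing a new adapter, while everything downstream keeps consuming the same messages.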

Thanks to contract-based integrations, adding new sources or replacing old ones is easier than ever. StreamX’s loosely coupled architecture enables companies to use the right tools whenever they need them.


Core concept:

A distributed event-streaming platform, offering low latency, scalability, and intelligent distribution, is the optimal way to achieve seamless integration and enhance the delivery of experiences.

StreamX’s biggest game changer is the event-streaming platform we used to replace publisher-based delivery. It can handle large volumes of messages and data with low latency. The event-streaming platforms we use made it possible to process millions of messages and gigabytes of data per second on a mere three-node cluster. And unlike centralized messaging systems, this platform can be scaled to hundreds of nodes.

The event-streaming platform is both the backbone and the brain of our DXP, generating, optimizing, and enriching experiences with data from incoming content source messages.

With distributed pub-sub, we replaced the traditional request-reply model with an event-based push model. StreamX stores the data from incoming systems in persistent logs, which makes it possible to replay events or read historical values without having to contact the originating system.
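The persistent-log idea can be illustrated with a tiny in-memory append-only log. This is a sketch under simplifying assumptions: a Python list stands in for a durable, replicated log, and `EventLog`, `replay`, and `latest` are hypothetical names. It shows how a consumer can replay from an offset, or read the latest value for a key, without calling the source system.

```python
from __future__ import annotations

from typing import Iterator


class EventLog:
    """In-memory stand-in for a persistent, append-only log.

    Real event-streaming platforms store and replicate the log durably;
    this sketch only shows offsets, replay, and reading the latest value.
    """

    def __init__(self) -> None:
        self._entries: list[tuple[str, dict]] = []

    def append(self, key: str, value: dict) -> int:
        self._entries.append((key, value))
        return len(self._entries) - 1  # offset of the new entry

    def replay(self, from_offset: int = 0) -> Iterator[tuple[int, str, dict]]:
        """Re-read events from any offset without contacting the source system."""
        for offset in range(from_offset, len(self._entries)):
            key, value = self._entries[offset]
            yield offset, key, value

    def latest(self, key: str) -> dict | None:
        """Return the most recent value stored for a key, if any."""
        for k, v in reversed(self._entries):
            if k == key:
                return v
        return None


log = EventLog()
log.append("/products/1.html", {"price": "10.00"})
log.append("/products/1.html", {"price": "12.00"})
# Even if the PIM were offline now, the current price is still in the log.
print(log.latest("/products/1.html"))    # {'price': '12.00'}
print([o for o, _, _ in log.replay(1)])  # [1]
```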

As a result, content and data sources are no longer runtime dependencies for delivering experiences. Downtime of a CMS, PIM, or DAM no longer affects platform availability.

Unlike Static Site Generation (SSG), our model uses the data in the persistent log to decide intelligently which experiences to generate or regenerate.

For example, if new product data from the PIM arrives, StreamX pipelines generate the corresponding product page. If global price changes are made, StreamX will regenerate all product pages, leaving CMS content pages unaffected.
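Routing where each kind of message regenerates only the pages that depend on it might look roughly like this. The message types, the site-wide `price_factor`, and the rendering function are assumptions made for the illustration, not StreamX's actual pipeline definitions.

```python
from __future__ import annotations

# Hypothetical state and rules illustrating selective regeneration.
products: dict[str, dict] = {}  # product data received from the PIM
cms_pages: dict[str, str] = {}  # page content received from the CMS
price_factor = 1.0              # site-wide price adjustment
generated: list[str] = []       # records which pages were (re)rendered


def render_product(sku: str) -> None:
    price = products[sku]["base_price"] * price_factor
    generated.append(f"/products/{sku}.html @ {price:.2f}")


def on_message(msg: dict) -> None:
    global price_factor
    if msg["type"] == "product":  # one product changed: one page
        products[msg["sku"]] = msg["data"]
        render_product(msg["sku"])
    elif msg["type"] == "global_price":  # price rule changed: all product pages
        price_factor = msg["factor"]
        for sku in products:
            render_product(sku)
    elif msg["type"] == "page":  # CMS content: only that page
        cms_pages[msg["path"]] = msg["html"]
        generated.append(msg["path"])


on_message({"type": "page", "path": "/about.html", "html": "<h1>About</h1>"})
on_message({"type": "product", "sku": "a", "data": {"base_price": 10.0}})
on_message({"type": "product", "sku": "b", "data": {"base_price": 20.0}})
generated.clear()
on_message({"type": "global_price", "factor": 0.9})
print(generated)  # ['/products/a.html @ 9.00', '/products/b.html @ 18.00']
```

The global price change touches both product pages but leaves the CMS page alone, which is the behavior the example above describes.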

With StreamX “smart pipes”, we always generate as much as possible ahead of time and push the pre-generated experiences to the next stages.

StreamX’s platform keeps a local persistent log, so we can plug in extensions that apply to existing content without re-publishing everything. For example, we could add a new search module that builds search indexes from the product, page, and DAM data already in the log.
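As an illustration of such an extension, the following sketch builds a simple inverted index by reading entries that are already in a log. The log entries and the whitespace tokenizer are hypothetical simplifications; a real search module would use proper text analysis.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical log contents: (type, key, payload) entries the platform already holds.
log = [
    ("product", "/products/mug.html", {"text": "Ceramic coffee mug"}),
    ("page", "/about.html", {"text": "About our coffee roastery"}),
    ("asset", "/img/mug.webp", {"text": "Photo of a mug"}),
]


def build_search_index(entries) -> dict[str, set[str]]:
    """A new extension: replay existing entries into an inverted index.

    Nothing is re-published; the index is derived from what the log already holds.
    """
    index: dict[str, set[str]] = defaultdict(set)
    for _type, key, payload in entries:
        for word in payload["text"].lower().split():
            index[word].add(key)
    return dict(index)


index = build_search_index(log)
print(sorted(index["coffee"]))  # ['/about.html', '/products/mug.html']
print(sorted(index["mug"]))     # ['/img/mug.webp', '/products/mug.html']
```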

With high throughput and smart distribution, StreamX naturally serves as an integration point. Each broker node on the platform processes and transforms messages, eliminating the single points of failure common in centralized messaging systems such as JMS brokers.

StreamX uses message choreography, which simplifies integration for teams. Product teams can create their own deployments without having to rely on a central integration team, as is often the case with Enterprise Service Bus (ESB) integrations.
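Choreography means there is no central orchestrator: each service subscribes to the events it cares about and reacts independently. The minimal in-memory pub-sub below is only a sketch; the topic names (`products.updated`, `pages.rendered`) and both services are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]
subscribers: dict[str, list[Handler]] = defaultdict(list)


def subscribe(topic: str, handler: Handler) -> None:
    subscribers[topic].append(handler)


def publish(topic: str, event: dict) -> None:
    for handler in subscribers[topic]:
        handler(event)


# Team A: renders product pages and announces them on its own topic.
def page_renderer(event: dict) -> None:
    publish("pages.rendered", {"path": f"/products/{event['sku']}.html"})


# Team B: added later by subscribing, without changing Team A's code.
sitemap: list[str] = []


def sitemap_builder(event: dict) -> None:
    sitemap.append(event["path"])


subscribe("products.updated", page_renderer)
subscribe("pages.rendered", sitemap_builder)
publish("products.updated", {"sku": "mug"})
print(sitemap)  # ['/products/mug.html']
```

In an orchestrated (ESB-style) setup, adding the sitemap step would typically mean changing a central flow; here it is just one more subscriber.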


Core concept:

Implementing a lightweight delivery layer, powered by a smart distribution system, enables fast, cost-efficient scaling and flexible deployment options. The delivery layer can then act as an intelligent CDN, providing low latency, immediate updates, and the ability to serve dynamic content.

The smart distribution system generates experiences with most pages pre-rendered, assets optimized, and search indexes ready to use. These experiences are stored in a log, ready for synchronization with new services. If we scale a service, for example by adding a new HTTP server, it automatically fetches all available experiences and subscribes to new ones. This is the final step of a multi-stage push process that ensures every service has the data it needs for efficient delivery.
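The catch-up-then-subscribe behavior of a newly added HTTP server can be sketched as follows. `ExperienceLog` and `HttpServerNode` are hypothetical, single-process stand-ins for the experience log and a delivery service.

```python
from __future__ import annotations

from typing import Callable

Listener = Callable[[str, str], None]


class ExperienceLog:
    """Stand-in for the log of generated experiences (path -> HTML)."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, str]] = []
        self.listeners: list[Listener] = []

    def append(self, path: str, html: str) -> None:
        self.entries.append((path, html))
        for listener in self.listeners:
            listener(path, html)  # push to services that are already in sync


class HttpServerNode:
    """Hypothetical delivery service: catch up from the log, then follow it."""

    def __init__(self, log: ExperienceLog) -> None:
        self.pages: dict[str, str] = {}
        for path, html in log.entries:      # 1. fetch everything generated so far
            self.pages[path] = html
        log.listeners.append(self.on_push)  # 2. subscribe to new experiences

    def on_push(self, path: str, html: str) -> None:
        self.pages[path] = html

    def serve(self, path: str) -> tuple[int, str]:
        html = self.pages.get(path)
        return (200, html) if html is not None else (404, "")


log = ExperienceLog()
log.append("/index.html", "<h1>Home</h1>")
node = HttpServerNode(log)  # scaled out after content already exists
log.append("/about.html", "<h1>About</h1>")
print(node.serve("/index.html"), node.serve("/about.html"))
```

In a real distributed system, the switch from catch-up to live subscription must not miss events published in between, typically by remembering the last offset read and subscribing from there; this single-threaded sketch sidesteps that problem.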

StreamX’s lightweight model, automatic synchronization, and narrowly scoped services allow for rapid, cost-efficient scaling. The setup is flexible, too: surplus services can be shut down whenever they are not needed.

Lightweight services can be deployed across regions or even on the edge, ensuring low latencies as each service is located near end users and has local data access. This setup functions like a CDN, but with extra advantages.

In StreamX, every platform event immediately updates the state of the services, so the platform keeps full control over its resources. Thanks to the push model, StreamX doesn’t need to be flushed like a CDN, because the delivery layer is no longer a cache.
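Because the delivery layer is fed by events rather than filled on cache misses, there is nothing to expire or flush. The sketch below assumes a hypothetical event format with `upsert` and `delete` operations and applies each event to the store as soon as it arrives.

```python
from __future__ import annotations


class DeliveryStore:
    """Delivery-side store driven only by platform events (no TTL, no flush)."""

    def __init__(self) -> None:
        self._pages: dict[str, str] = {}

    def apply(self, event: dict) -> None:
        if event["op"] == "upsert":
            self._pages[event["path"]] = event["html"]
        elif event["op"] == "delete":
            # Unpublished content is removed as soon as the event arrives.
            self._pages.pop(event["path"], None)
        else:
            raise ValueError(f"unknown op {event['op']!r}")

    def get(self, path: str) -> str | None:
        return self._pages.get(path)


store = DeliveryStore()
store.apply({"op": "upsert", "path": "/sale.html", "html": "<h1>Sale: 20% off</h1>"})
store.apply({"op": "upsert", "path": "/sale.html", "html": "<h1>Sale: 30% off</h1>"})
print(store.get("/sale.html"))  # <h1>Sale: 30% off</h1>
store.apply({"op": "delete", "path": "/sale.html"})
print(store.get("/sale.html"))  # None
```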

Another significant advantage over a CDN is that experiences are available before they're requested. The end user receives responses from the delivery layer from the very first request, and the risk of server crashes that typically follow cache flushes is eliminated.

StreamX is not limited to static content. With the event-based push model, we can generate experiences that other platforms would have to render dynamically. One example is a flight booking page, where prices change according to a backing algorithm that considers demand, interest, and available seats.

In a traditional DXP or a MACH architecture, the prices would need to be fetched dynamically every time a client visits the page. In a real-time DXP, we prepare the page with current prices before it’s accessed, and keep it consistent based on incoming events.
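A flight page kept current by events could be sketched like this. The pricing rule (the price grows with the share of seats sold), the event fields, and the flight number are invented for the illustration; the article does not describe the actual algorithm.

```python
from __future__ import annotations


# Hypothetical pricing rule: only seat occupancy is used here, although the
# real algorithm is said to also consider demand and interest.
def price(base: int, seats_left: int, capacity: int) -> int:
    """Price in whole currency units, rising as the flight fills up."""
    load = 1 - seats_left / capacity
    return round(base * (1 + load))


pages: dict[str, str] = {}


def on_flight_event(event: dict) -> None:
    """Re-render the flight page as soon as an availability event arrives."""
    p = price(event["base"], event["seats_left"], event["capacity"])
    pages[f"/flights/{event['flight']}.html"] = (
        f"<h1>{event['flight']}</h1><p>{p} EUR, {event['seats_left']} seats left</p>"
    )


on_flight_event({"flight": "XY123", "base": 100, "seats_left": 100, "capacity": 100})
on_flight_event({"flight": "XY123", "base": 100, "seats_left": 50, "capacity": 100})
print(pages["/flights/XY123.html"])  # <h1>XY123</h1><p>150 EUR, 50 seats left</p>
```

A visitor reading the page never triggers a price calculation; the work happens once per event, not once per request.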

However, there are cases when experiences cannot be generated ahead of time. One example is a search results page: we cannot generate search results because we don’t know the query in advance.

In such cases, we create semi-products, such as optimized search feeds, and place them on the delivery layer for specialized, fast delivery. Requesting such a page requires falling back to the standard request-reply model, but we make sure it is used only when needed.
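The split between a pre-generated semi-product and a request-time fallback might look like this. The feed format and the naive all-terms-must-match rule in the hypothetical `handle_search` function are assumptions; the point is that only the query evaluation happens per request, against data already on the delivery layer.

```python
from __future__ import annotations

# Pre-generated "semi-product": a compact search feed pushed to the delivery
# layer ahead of time (its shape is assumed for this sketch).
search_feed = [
    {"path": "/products/mug.html", "title": "Ceramic mug"},
    {"path": "/products/cup.html", "title": "Paper cup"},
    {"path": "/about.html", "title": "About us"},
]


def handle_search(query: str, limit: int = 10) -> list[str]:
    """Request-reply fallback: only the query itself is evaluated per request."""
    terms = query.lower().split()
    hits = [
        doc["path"]
        for doc in search_feed
        if all(term in doc["title"].lower() for term in terms)
    ]
    return hits[:limit]


print(handle_search("mug"))  # ['/products/mug.html']
print(handle_search("CUP"))  # ['/products/cup.html']
```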

A CDN can still be used with StreamX, but it acts as an add-on rather than a component critical to normal site operation.
