by Michał Cukierman

20 min read

Successful AEM architects know that to create performant sites in AEM, it’s necessary to leverage the cache.

A site with cached content is fast, easy to scale, and provides consistent performance under load. Caching often allows us to move the content closer to the client, which additionally lowers request latency. However, implementing the right caching strategy often proves to be challenging. The first decision is what should be cached on the Dispatcher, what on the CDN, and what in the user’s browser. The second is how and when to invalidate the cache, and how to control the caches using TTL and HTTP headers.

However, even with an elaborate caching layer, it’s not possible to cache all types of content. Components using live data or personalized experiences require on-demand response generation. Most of the sites contain dynamic and static parts, which have to be served using different techniques. It is a challenge to mix resources with different characteristics, static and dynamic, to provide a personalized user experience while keeping high performance at scale.

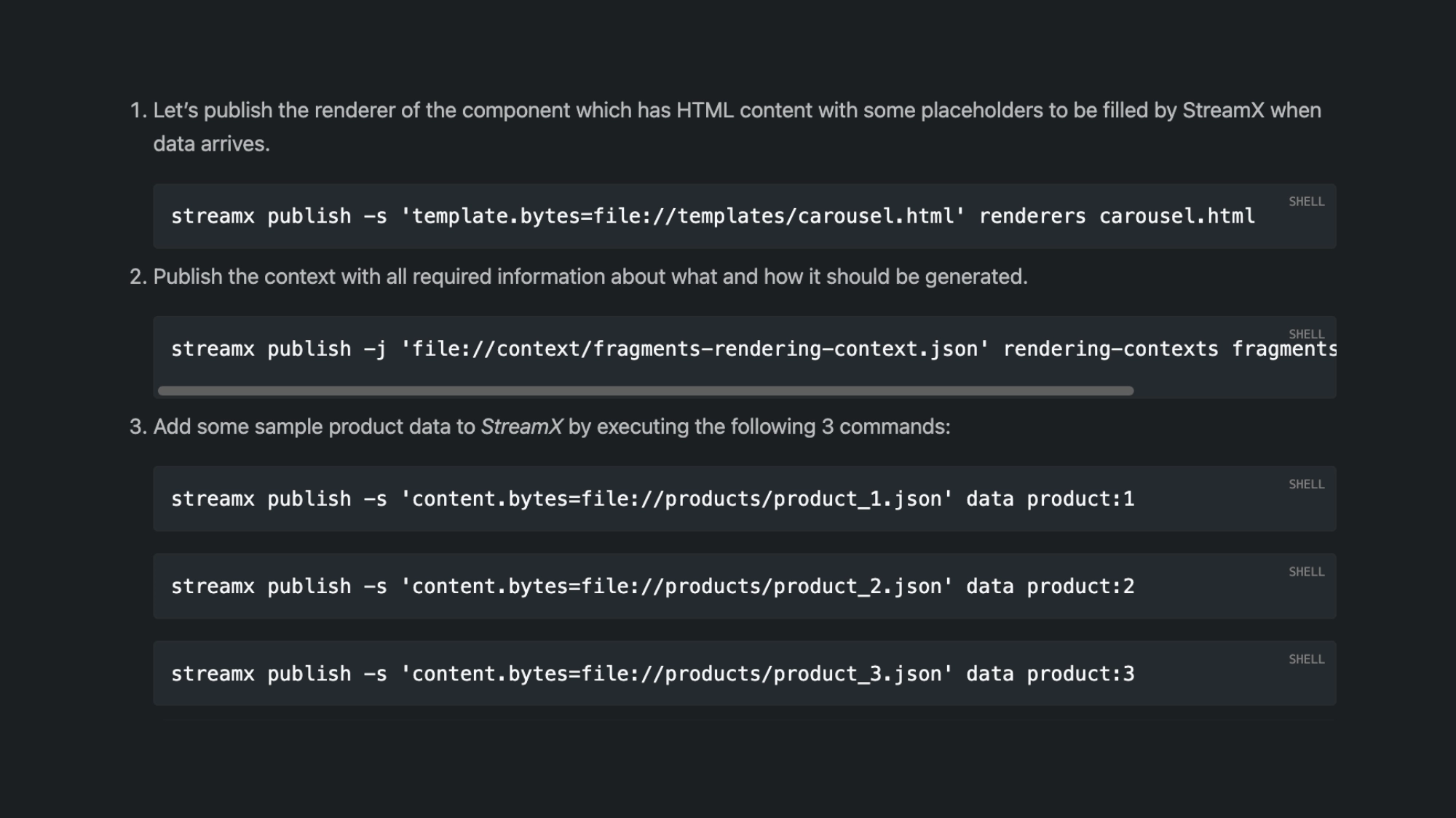

Let's divide the resources into four categories:

Static Resources: Immutable resources like frontend assets, images, or videos. Content of this type can be easily cached on the CDN or even in the user’s browser. Caching is controlled by HTTP headers and uses a long TTL value. It’s important to create a unique URL for each of the resources because the URL becomes the caching key. By convention, such a URL includes a version identifier or a hash. Access to these resources is fast, and the client rarely requests them directly from the Publish instance.

Published Content: This is the content that is controlled by the content authors using publications. Content can be updated at any time, or unpublished if needed. This is probably the most common type of resource managed by AEM. Pages, Assets, Tags, or Fragments fall into this category. To improve the performance and scalability of the sites without losing control over data relevancy, AEM comes with the Dispatcher module. Dispatchers may cache the resources as files on disk, which results in high speed and stability of the site. The Dispatcher cache can be invalidated manually or using an AEM flush agent. Invalidation, however, is not a trivial process. Pages sometimes depend on other pages or resources, so a page should be invalidated whenever its rendering changes, and not only when the page data itself changes. To address this, the cache flush can invalidate the whole site or its subtree.

Data Driven Components: The first category of dynamic pages, which depend on source system data, but not on the user request or a session. It contains data that changes periodically but can be shared by all or a group of site visitors. Example cases could be current stock exchange quotes and updates for products during promotions or before important events. Another interesting example may be a page with live updates, for example one displaying results of competitions at the Olympic Games. The data may change every second and has to be presented to a large group of clients at the same time. This category of components is especially important because companies already possess data they could leverage to create more engaging experiences. Unfortunately, this type of page cannot be cached: it changes frequently, so caching it would result in stale data.

Request Scoped Components: Traditional dynamic components that are rendered in response to the user request. Example components are products recommended for a particular visitor, or search components that display results in response to the query. The components of this type are not cacheable for a couple of reasons:

the response may contain information related to the requesting visitor, like the username

the results may be tailored just for the one that requested it and may not have the same value for other users (for example recommendations)

the cache hit ratio would be low because the number of parameter variants sent with the requests is large (for example search queries)

Due to the above, the request-scoped dynamic components are usually rendered by the backend systems.

Web resource classification

These four categories are created only for the purpose of this article, so they do not cover all possible cases. It’s worth noting that the last two categories are often mixed with each other: a component can be both data-driven and tailored for a certain visitor. The goal is to create sites that are as performant and stable as ones using cached content, while being able to deliver personalized content utilizing the data owned by the organization.

Organizations that want to get ahead of the competition need to learn how to deliver engaging, personalized experiences at scale. Those who fall behind risk reduced customer engagement and lower conversion rates. Additionally, they may experience increasing customer churn, and by not fully leveraging their data they risk rendering their marketing efforts ineffective.

The digital landscape evolves fast, and there are more possibilities every year. The challenge is to leverage the data and technology:

Companies have access to more data from a growing number of systems like CDP, PIM, e-commerce, or internal systems.

With the boom of Large Language Models (LLM), the data can be processed without big operational efforts.

Cloud computing is a de facto standard in the enterprise world, giving us new approaches for achieving scalability and performance.

Creating personalized experiences that use data from across the organization is just one part of the equation. With the growing usage of the internet and the number of channels the visitors use, the site needs to operate at scale. Slow, inaccessible services that struggle with traffic may cause revenue loss and affect the brand’s reputation. Achieving the goal may be even more difficult for organizations operating globally, where fast access to the site has to be ensured for every geographic location.

Static resources are published and can be cached on the CDN and in the user’s browser. To keep it simple, each new deployment should change the URLs of the updated assets. The URL should contain a version identifier or a hash, because caching servers and browsers use the URL as the caching key. In an ideal scenario, a cache of this type never has to be invalidated, as the versions of resources used by the page are declared in the HTML, which defines the contract.
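The URL-as-cache-key convention can be illustrated with a minimal sketch. The helper names `fingerprinted_url` and `immutable_cache_headers`, the 12-character digest length, and the one-year `max-age` are assumptions made for this example, not AEM APIs or AEM defaults.

```python
import hashlib
from pathlib import PurePosixPath


def fingerprinted_url(path: str, content: bytes, digest_len: int = 12) -> str:
    """Return the asset path with a short content hash before the extension.

    Hypothetical helper: any change in the content yields a new URL,
    so CDN and browser caches never have to be invalidated.
    """
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


def immutable_cache_headers(max_age_seconds: int = 31_536_000) -> dict[str, str]:
    """Long-TTL headers for a fingerprinted asset (one year is an assumed value)."""
    return {"Cache-Control": f"public, max-age={max_age_seconds}, immutable"}


if __name__ == "__main__":
    old = fingerprinted_url("/static/site.css", b"body{}")
    new = fingerprinted_url("/static/site.css", b"body{color:red}")
    print(old)
    print(new)  # differs from the previous URL because the content changed
    print(immutable_cache_headers())
```

Because the HTML references the exact fingerprinted URL, a deployment only has to publish new HTML. Old assets can stay cached until they expire.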

In general, this type of content is easy to scale and due to the usage of CDN delivers consistent performance with low latency. One drawback is that the first request that misses the caches needs to reach out to Publish instances, which can be slow for some users. This can be addressed by the cache warm-ups performed after each of the deployments. Nevertheless, the cache misses in this scenario are rather theoretical, and AEM can serve static resources at scale and with good performance.

Published content like images or pages should be cached on the dispatcher. It allows us to keep control over the content lifecycle while preserving relatively good performance and stability.

Dispatcher instances can be added to handle more load, which is relatively easy to do, and they can be deployed into multiple geographic regions. Cache performance can be optimized further by also storing the content on the CDN. In that case, the CDN cache can be invalidated by a short TTL setting or a custom CDN cache flush agent. Control over the version delivered to the user is then less granular, but this could be acceptable for some customers.
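Subtree invalidation, the mechanism a flush agent triggers on publication, can be sketched with a small in-memory model. This is an illustration only: the `PathCache` class is hypothetical and does not reproduce how the Dispatcher stores or invalidates files on disk.

```python
from typing import Optional


class PathCache:
    """Toy cache keyed by URL path (hypothetical, not the Dispatcher's implementation)."""

    def __init__(self) -> None:
        self._entries: dict[str, str] = {}

    def put(self, path: str, body: str) -> None:
        self._entries[path] = body

    def get(self, path: str) -> Optional[str]:
        return self._entries.get(path)

    def invalidate_subtree(self, root: str) -> int:
        """Drop the page at `root` and everything below it; return the number of dropped entries."""
        root = root.rstrip("/")
        stale = [
            p for p in self._entries
            # matches /root, /root.html and /root/child.html, but not /rootother.html
            if p == root or p.startswith(root + "/") or p.startswith(root + ".")
        ]
        for p in stale:
            del self._entries[p]
        return len(stale)


if __name__ == "__main__":
    cache = PathCache()
    cache.put("/content/site/en.html", "<html>en</html>")
    cache.put("/content/site/en/products.html", "<html>products</html>")
    cache.put("/content/site/de.html", "<html>de</html>")
    dropped = cache.invalidate_subtree("/content/site/en")
    print(dropped, cache.get("/content/site/de.html"))
```

Every dropped entry has to be rendered again by a Publish instance on the next request, which is where the cold-cache cost comes from.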

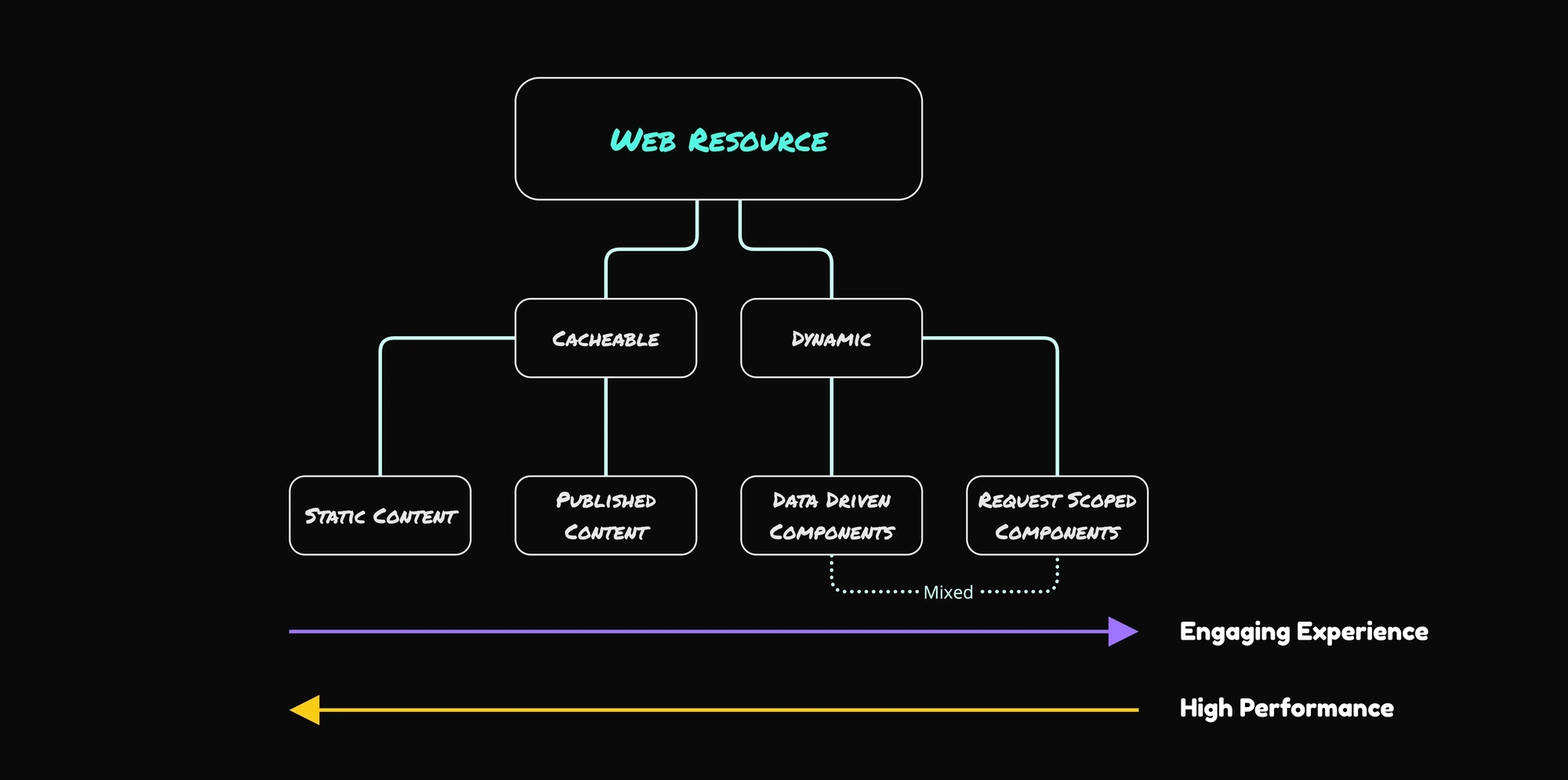

The drawback is, again, the cold-cache issue. It is more visible here because the cache flush happens after every publication, not only after deployments.

The issue may not be relevant for sites with a small number of content publications, a limited number of pages, and high traffic. It may, however, need to be addressed for sites with the opposite characteristics: a large number of content updates, a relatively large number of unique pages, and low traffic. Normal traffic may not be enough to populate the cache, which results in a low cache-hit ratio. We can, once again, implement a cache warm-up mechanism that runs after the publication. Note that in this case the right implementation may not be trivial, as we do not want to end up in a situation where 90% of the site traffic is generated by warm-up scripts. A properly built sitemap could also be helpful in achieving this goal.

Apart from the cold-cache issue, which may be problematic for a specific group of customers, AEM handles this type of content well. It is, again, a scalable, fast, and stable solution.
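One way to keep warm-up traffic under control is to select only the sitemap URLs that fall under the paths that were just published, and to cap their number. The sketch below assumes a standard sitemaps.org XML document. The function name `urls_to_warm` and the `limit` parameter are hypothetical, and the actual HTTP requests are left out.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_to_warm(sitemap_xml: str, published_prefixes: list[str], limit: int = 100) -> list[str]:
    """Return at most `limit` sitemap URLs whose path starts with a published prefix."""
    root = ET.fromstring(sitemap_xml)
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", SITEMAP_NS) if el.text]
    selected = [
        url for url in locs
        if any(urlparse(url).path.startswith(prefix) for prefix in published_prefixes)
    ]
    return selected[:limit]


if __name__ == "__main__":
    sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
      <url><loc>https://example.com/en/products/a.html</loc></url>
      <url><loc>https://example.com/en/products/b.html</loc></url>
      <url><loc>https://example.com/en/about.html</loc></url>
    </urlset>"""
    for url in urls_to_warm(sitemap, ["/en/products/"], limit=10):
        print(url)  # a real warm-up job would request each URL here
```

Restricting the warm-up to the affected prefixes and applying a hard limit is one possible way to avoid the scenario where warm-up scripts dominate the site traffic.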

Data Driven Components are probably the most problematic. They cannot be cached, so neither the CDN nor the Dispatcher can be used. In this case, AEM is not the source of the information that triggers the change. Usually the change comes from other systems like PIM, e-commerce, or legacy ones. What is AEM’s role here? In most cases, it controls the site structure and the layout of the page on which the data-driven component is used.

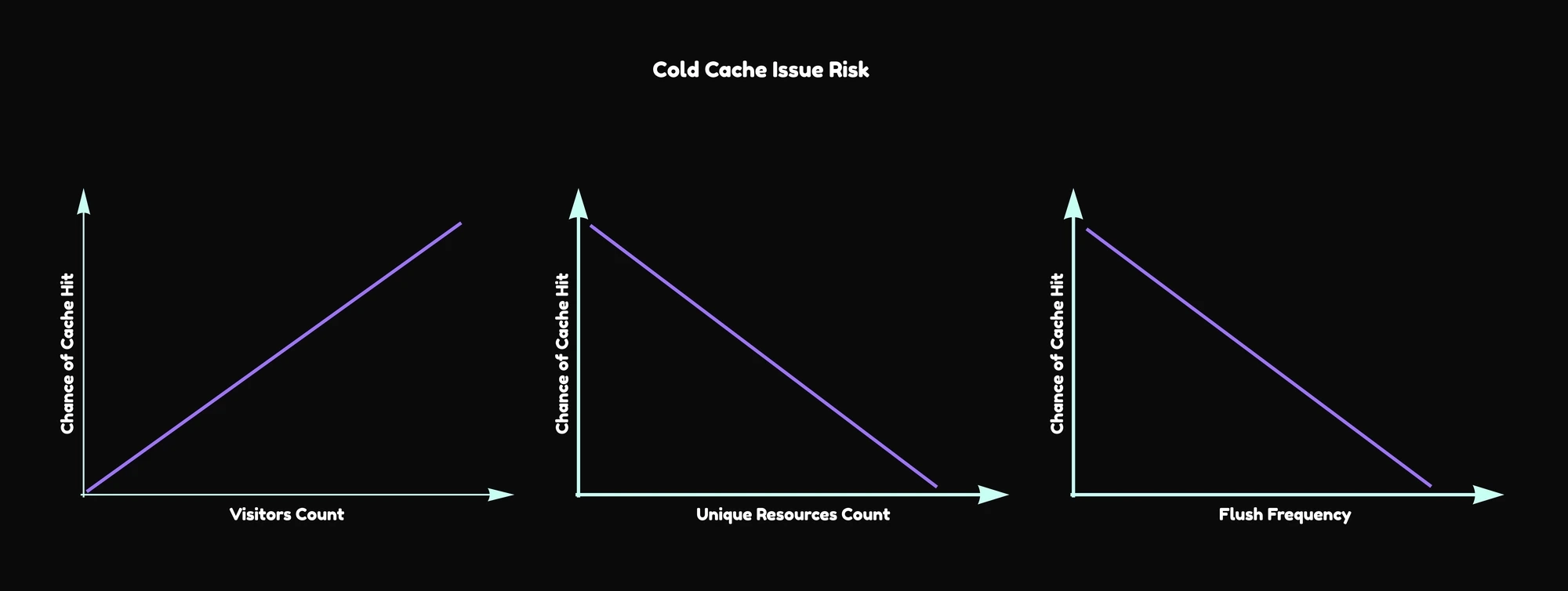

The official AEM recommendation states that an AEM Publish instance can handle a constant load of about 40 requests per second (3.5 million requests per day / 86,400 seconds). Source
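The arithmetic behind this figure can be checked with a short calculation. It assumes that the 3.5 million figure is a daily request count, which the divisor of 86,400 seconds implies. The sizing helper `publish_instances_needed` is a hypothetical simplification that assumes load spreads evenly and capacity scales linearly.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def average_rps(requests_per_day: float) -> float:
    """Average requests per second for a given daily request count."""
    return requests_per_day / SECONDS_PER_DAY


def publish_instances_needed(target_rps: float, per_instance_rps: float = 40.0) -> int:
    """Rough instance count, assuming linear scaling (a simplification)."""
    return math.ceil(target_rps / per_instance_rps)


if __name__ == "__main__":
    print(round(average_rps(3_500_000), 1))   # 40.5
    print(publish_instances_needed(1_000))    # 25
```

Under these assumptions, uncached traffic of 1,000 requests per second would already call for about 25 Publish instances, which shows why uncacheable components are expensive to serve from Publish.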

Let's take an example of accurate stock availability information on the product detail page. The data with the availability of a product may come from the e-commerce system, which usually can expose the data using an HTTP endpoint.

The common ways to embed it in a page are to:

Use AEM as a renderer - the product details page request goes to a Publish instance, which holds the information about the layout and the embedded component with stock availability information. Next, the Publish instance calls the e-commerce system to fetch the stock data and uses the response to produce a rendition of the component and, eventually, the page.

Use the frontend to render a component - AEM provides the component and the frontend code, and fetching the data from e-commerce is deferred to the browser. This solution is similar to the first one, because the page and site structure are still controlled by AEM, but the rendering of the component is delegated to the user’s browser. It improves the initial page load, but two separate systems have to be called to render the complete page. Network quality between the client and both systems becomes an important factor.

There are of course other possibilities, but most of them share the same concept: to produce a page with up-to-date stock data, calls to both AEM and e-commerce are required.

The list of potential problems:

Scalability: If any of the systems do not scale, the entire solution won’t scale. This is especially problematic when there are slow, monolithic, legacy systems involved.

Performance: There are always two requests that have to be made, so the network latency and the time required for processing the request are doubled.

Latency: It’s impossible to replicate all source systems in different geographic locations.

Availability: If any of the systems is down, or the systems cannot communicate for some reason, the page won’t be rendered correctly.
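The performance and availability points above can be quantified with a back-of-the-envelope model. The sketch assumes that the systems fail independently and that the calls are made one after another. The function names and the sample numbers are illustrative, not measurements.

```python
from math import prod


def serial_availability(availabilities: list[float]) -> float:
    """Availability of a page that needs every listed system to be up.

    Assumes independent failures, so the individual availabilities multiply.
    """
    return prod(availabilities)


def sequential_latency_ms(latencies_ms: list[float]) -> float:
    """Total latency when the calls are made one after another."""
    return sum(latencies_ms)


if __name__ == "__main__":
    # Illustrative numbers: AEM Publish at 99.9%, e-commerce at 99.5%
    print(serial_availability([0.999, 0.995]))
    # Illustrative numbers: 120 ms for AEM, 80 ms for e-commerce
    print(sequential_latency_ms([120, 80]))
```

In this model every additional source system on the request path lowers the combined availability and adds to the response time, so the weakest system bounds the whole page.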

The stock availability example is very basic, but it is not hard to extrapolate to a case where more source systems are involved, like reviews, PIM, recommendation systems, and so on.

It’s fair to say that AEM does not address the problems of data-driven components.

NOTE:

There is also a practice of loading all the data into the AEM repository using nightly batch jobs. It’s still a fairly common technique, and it turns the external data into published content. Unfortunately, it works only if the customer accepts that the site may contain outdated information, and it may lead to an architecture where the AEM repository and the publication process become a bottleneck.

Request Scoped Components are handled in AEM by Publish instances, where the Java code is run to collect the data, process it, and render the response to the end user. The performance and scalability of such components are moderate, noticeably slower than cached content. Publish instances are not easy to scale and it may be hard to create cross-regional deployments.

Publish instances may not perform well under high load, so it’s not recommended to overload them with dynamic, non-cacheable components.

As a result, the Publish instance may be a good choice for providing dynamic components for sites with moderate traffic, where performance is not critical. In other cases, the common approach nowadays is to use frontend components that call microservices to provide dynamic components to the page controlled by AEM.

Request scoped components

AEM handles static and published content well. It can also be used to deliver personalized, data-driven experiences when data relevancy, scale, and performance are not key factors. Nevertheless, it’s clear that its architecture evolved from a CMS, and therefore it suffers from the same limitations as other CMSs. It was created primarily for managing and publishing content, giving content authors and marketers the best tool to do their work. That is often not sufficient to solve the challenges of the modern web.

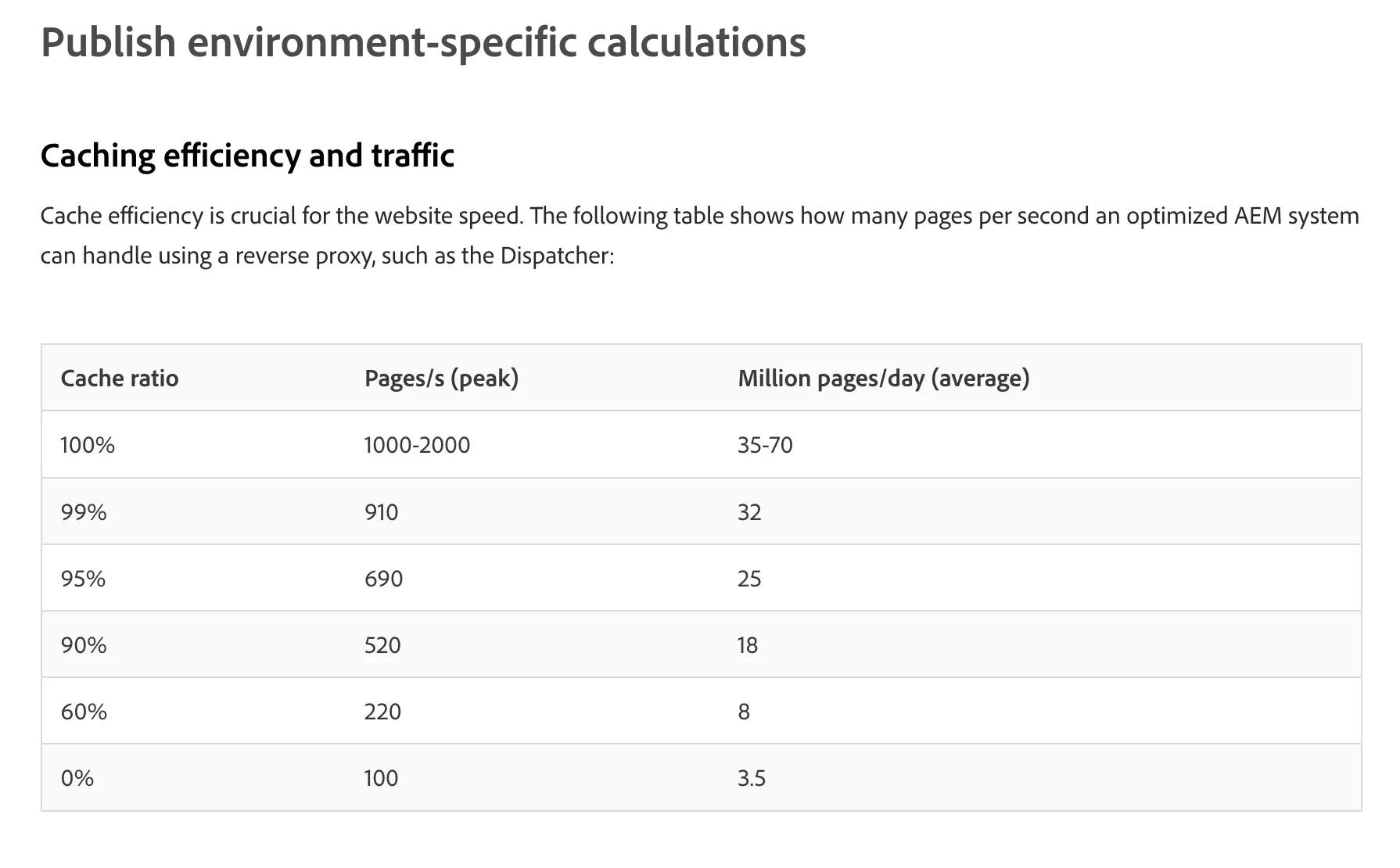

StreamX aims to bridge the gap between AEM's content management capabilities and the needs of modern digital experiences. It gives the architects the possibility to deliver data-driven and dynamic content at scale and performance comparable to delivering static content.

Digital Experience Mesh was designed from the ground up to address two challenges:

processing DX data pipelines from multiple sources with low milliseconds end-to-end latencies in a scalable way

delivering DX experiences using geographically distributed, high-performant services that can scale rapidly

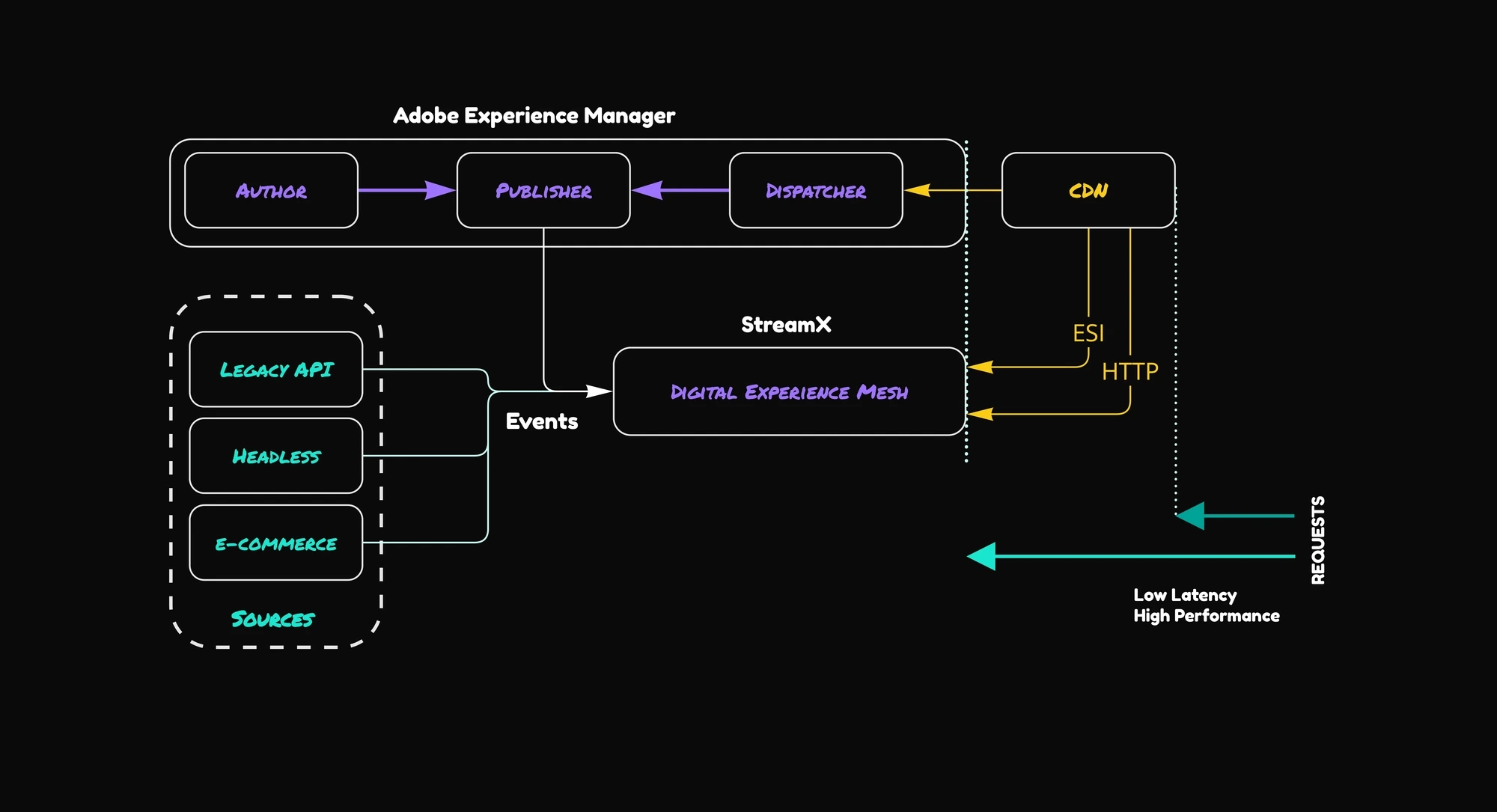

Both goals were achieved by employing event streaming, Service Meshes, and modern techniques similar to ones known from Data Meshes. Instead of asking the source systems for the data, the mesh listens for updates in the form of events.

Every event containing relevant information triggers event-driven processing services to create experiences ahead of demand.

Digital experiences are delivered to the end customers using the StreamX delivery layer - made of highly scalable, specialized services placed close to the clients for fast access. All the delivery services create a Unified Data Layer, a single source of truth for experiences that can be reused by multiple channels.

The common experience types StreamX delivers are pre-rendered components, pages, frontend components data, or services like live search service.

DX architecture involving AEM and StreamX

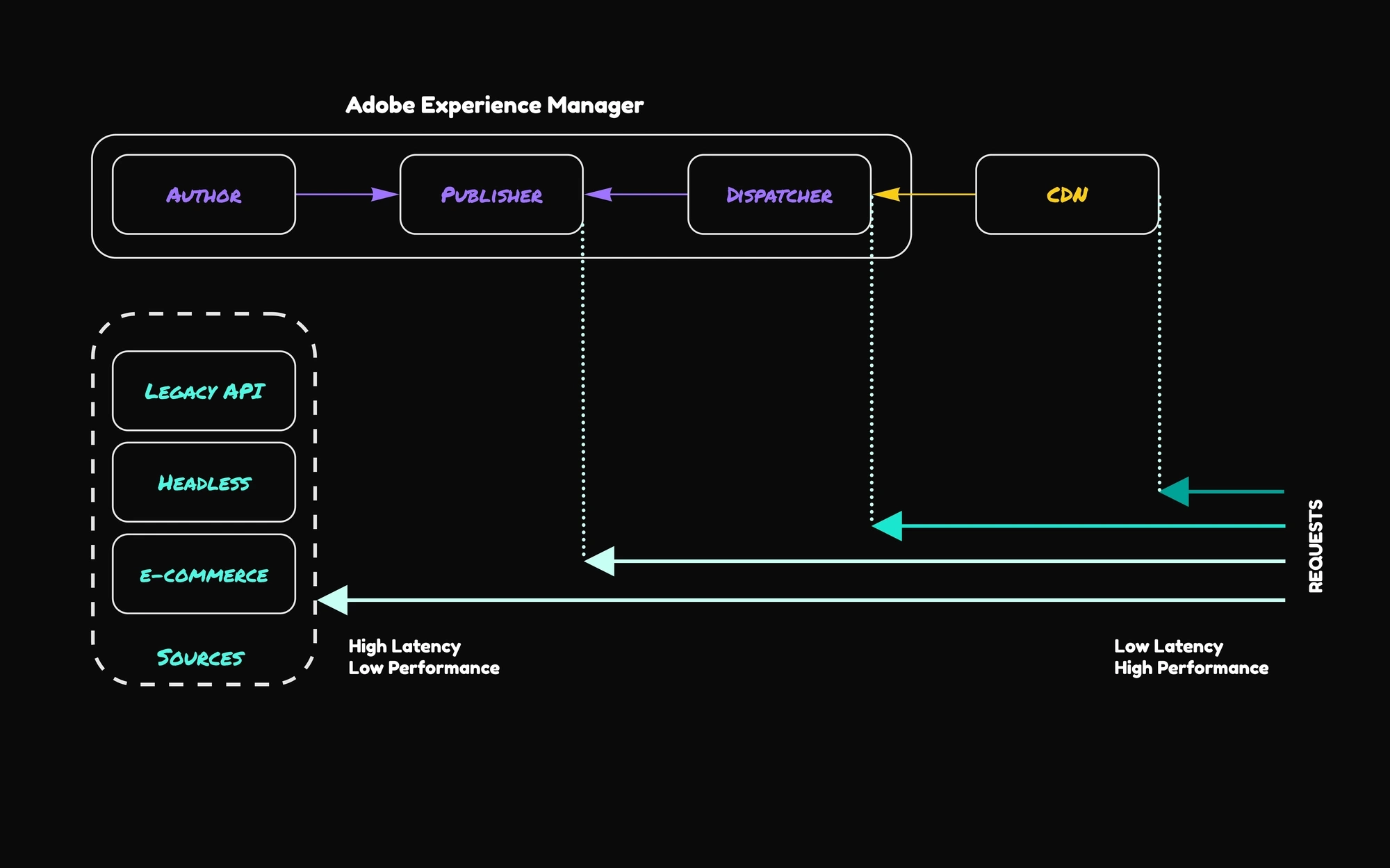

StreamX differs fundamentally from request/reply models that depend heavily on caches. In the traditional model, the request made by the client travels down to the source systems like AEM, PIM, or e-commerce, and the results are cached on the Dispatcher, on the CDN, or in the browser to achieve performance at scale.

StreamX listens to updates from source systems like AEM, PIM, or e-commerce and triggers data pipelines to create the experience and push it to a service close to the client. In this model, the updates from the source systems form an infinite stream of events that are processed continuously, resulting in an always-changing and always up-to-date Unified Data Layer.
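The difference between the two models can be illustrated with a minimal push-based sketch. Everything in it is hypothetical: the `Event`, `UnifiedDataLayer`, and `Pipeline` classes are invented for this illustration and are not StreamX APIs. The point is only that rendering happens when a source system emits an event, and that client reads never reach the source system.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Event:
    """An update emitted by a source system (hypothetical shape)."""
    source: str
    key: str
    payload: dict


class UnifiedDataLayer:
    """Stand-in for the delivery layer: stores ready-to-serve experiences."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def publish(self, key: str, rendered: str) -> None:
        self._store[key] = rendered

    def get(self, key: str) -> Optional[str]:
        # Client requests end here; no source system is called.
        return self._store.get(key)


class Pipeline:
    """Renders an experience ahead of demand whenever an event arrives."""

    def __init__(self, layer: UnifiedDataLayer, renderer: Callable[[Event], str]) -> None:
        self._layer = layer
        self._renderer = renderer

    def on_event(self, event: Event) -> None:
        self._layer.publish(event.key, self._renderer(event))


def render_stock(event: Event) -> str:
    """Example renderer for a stock availability component."""
    return f"<span>In stock: {event.payload['quantity']}</span>"


if __name__ == "__main__":
    layer = UnifiedDataLayer()
    pipeline = Pipeline(layer, render_stock)
    pipeline.on_event(Event("e-commerce", "stock/sku-1", {"quantity": 5}))
    print(layer.get("stock/sku-1"))
    pipeline.on_event(Event("e-commerce", "stock/sku-1", {"quantity": 4}))
    print(layer.get("stock/sku-1"))
```

In this sketch the stored fragment is replaced on every event, so there is nothing to invalidate and no cold cache after an update.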

We know that cacheable content already works well using the Publish/Dispatcher/CDN setup. StreamX can provide pre-rendering to solve cold-cache and first-rendering issues.

In this case, StreamX would act like a pre-renderer for AEM pages that are triggered using content publication events. It is similar to what Server Side Rendering is for the front end, but happening in real-time, on every change in a source system.

The real power of DX Mesh lies in the ability to serve Dynamic Components and services that work at scale, with high performance.

How StreamX solves the Data Driven Components problems:

scalability: In Digital Experience Mesh, only the delivery services are responsible for delivering the content to the clients. Source systems are never called and their role is limited to notifying about the change. To handle more external traffic, more delivery service replicas need to be added. That can be done with a single command or automatically.

performance: Once again, the requests from clients never go beyond the delivery services. It means that there is always one call to get the complete experience, because data aggregation and rendering can be a part of the Service Mesh.

latency: Delivery services can be deployed to multiple geographic locations. Source systems are not accessed by the client requests and, as a result, do not affect the latency in the response.

availability: all the source systems can be down. The site operates as long as the delivery services are operating.

How StreamX solves the Request Scoped Components problems:

The main problems of Request Scoped Components are speed and scalability. StreamX comes with a Unified Data Layer built from high-performing services that can serve tens of thousands of requests per second and scale horizontally. Each of the services is single-purpose and is optimized for speed and scalability.

The recommended way of integrating StreamX-driven components with AEM is to leverage Edge Side Includes (ESI) on the CDN. This way content authors can still control the full page layout by placing StreamX-powered components on standard AEM pages. Once the components are published and the page is rendered by AEM, the CDN controls the composition. Alternative approaches to including StreamX components are Server Side Includes (SSI) or frontend calls. The last method is also the recommended way to leverage fully dynamic and personalized components like live searches.
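The composition step can be sketched as follows. The `<esi:include src="..."/>` element comes from the ESI language specification. The fragment URL, the function names, and the regex-based resolver are assumptions made for this illustration: a real CDN resolves the includes itself, and this toy resolver handles only the simplest self-closing form.

```python
import html
import re
from typing import Callable

# Matches only the simplest self-closing form: <esi:include src="..."/>
ESI_INCLUDE = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')


def esi_include_tag(src: str) -> str:
    """Markup an AEM component could emit in place of the dynamic fragment."""
    return f'<esi:include src="{html.escape(src, quote=True)}"/>'


def compose(page_html: str, fetch_fragment: Callable[[str], str]) -> str:
    """Toy stand-in for the CDN: replace every include with the fetched fragment."""
    return ESI_INCLUDE.sub(
        lambda match: fetch_fragment(html.unescape(match.group(1))),
        page_html,
    )


if __name__ == "__main__":
    # Hypothetical fragment URL served by a delivery service
    fragments = {"/fragments/stock/sku-1.html": "<span>In stock: 5</span>"}
    page = "<main><h1>Product</h1>" + esi_include_tag("/fragments/stock/sku-1.html") + "</main>"
    print(compose(page, fragments.__getitem__))
```

The page rendered by AEM stays cacheable because it contains only the include tag. The fragment behind the tag can change independently of the page.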

StreamX-driven components integration

NOTE:

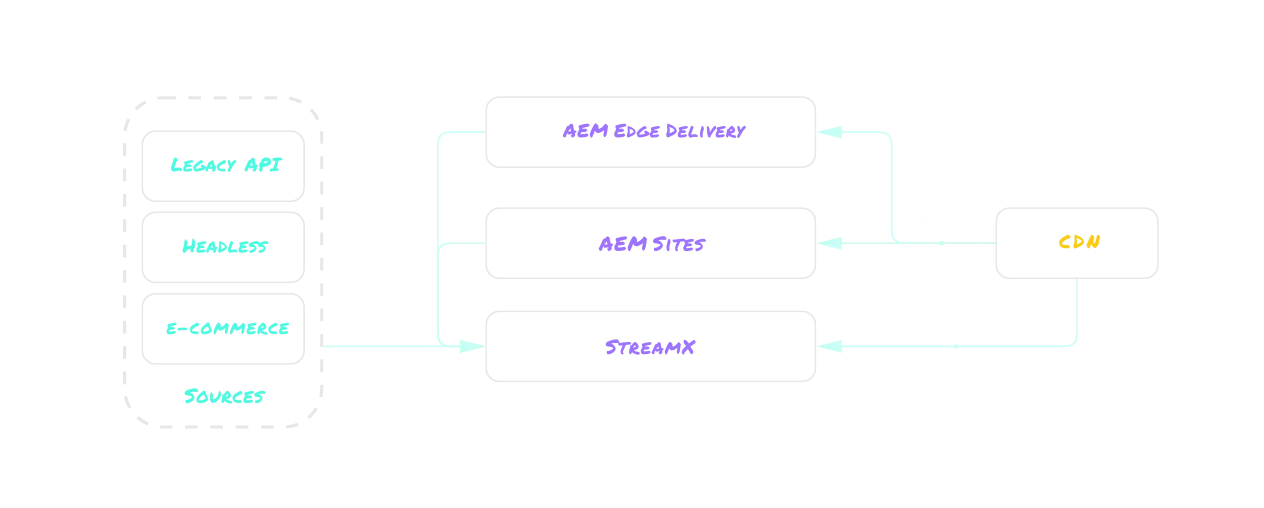

Combining frontend calls and ESI makes it possible to share the same components, like Product Listings or recommendations, between AEM Sites and Edge Delivery. This way the Unified Data Layer becomes a high-performance, scalable single source of truth for a modern AEM stack.

The challenge of delivering personalized, dynamic experiences at scale within the AEM platform is still relevant. The caching mechanisms that architects have relied on to build scalable, high-traffic sites struggle to keep pace with the need to utilize data-driven or personalized content.

StreamX bridges the gap between AEM's content management capabilities and the demands of modern digital experiences. Digital Experience Mesh empowers organizations to unlock a new level of performance and scalability for their dynamic AEM content.

Maximize Adobe Experience Manager’s potential and create a composable, future-ready platform without a time-consuming rebuild.

Book a StreamX demo to learn how.